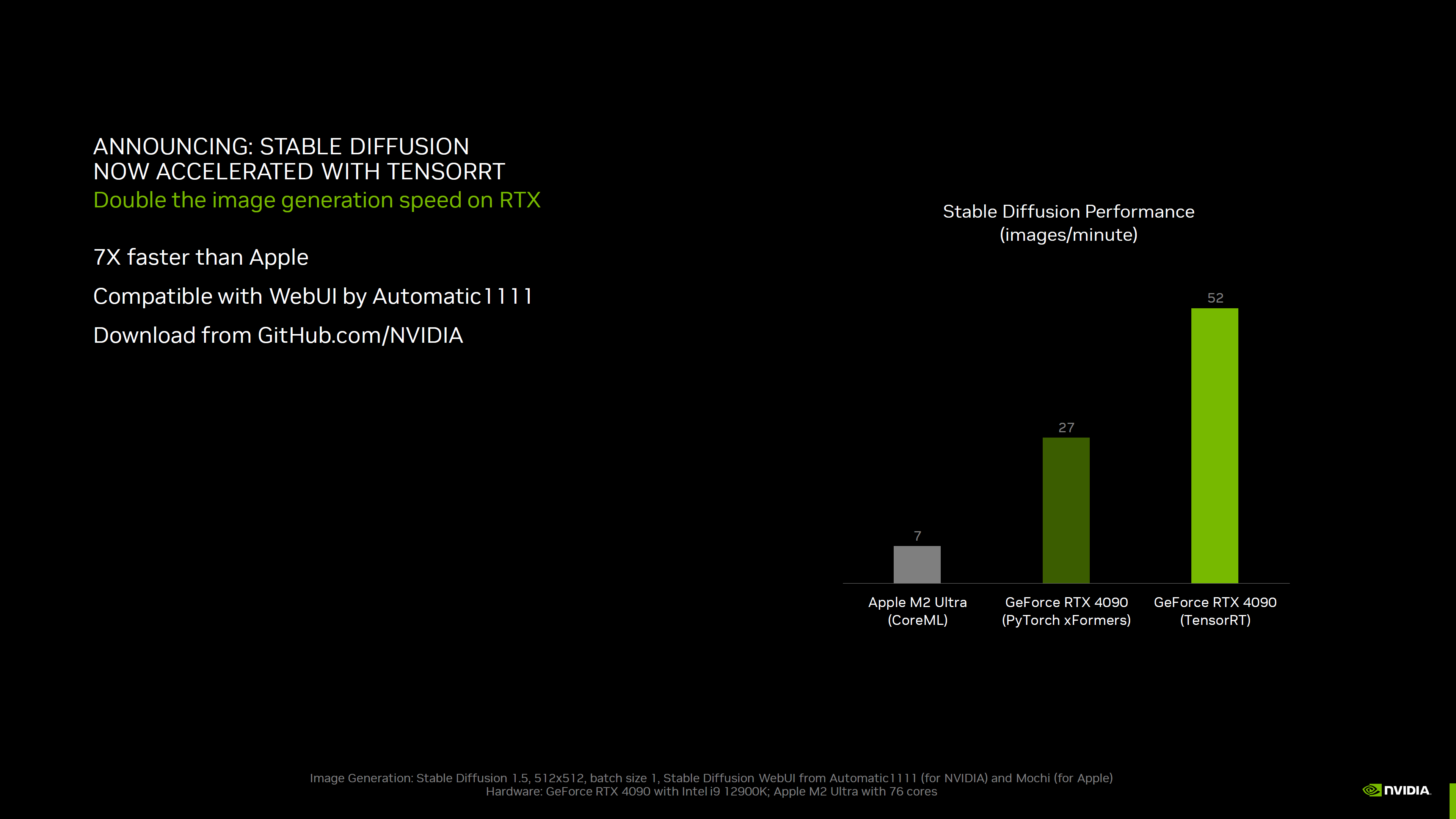

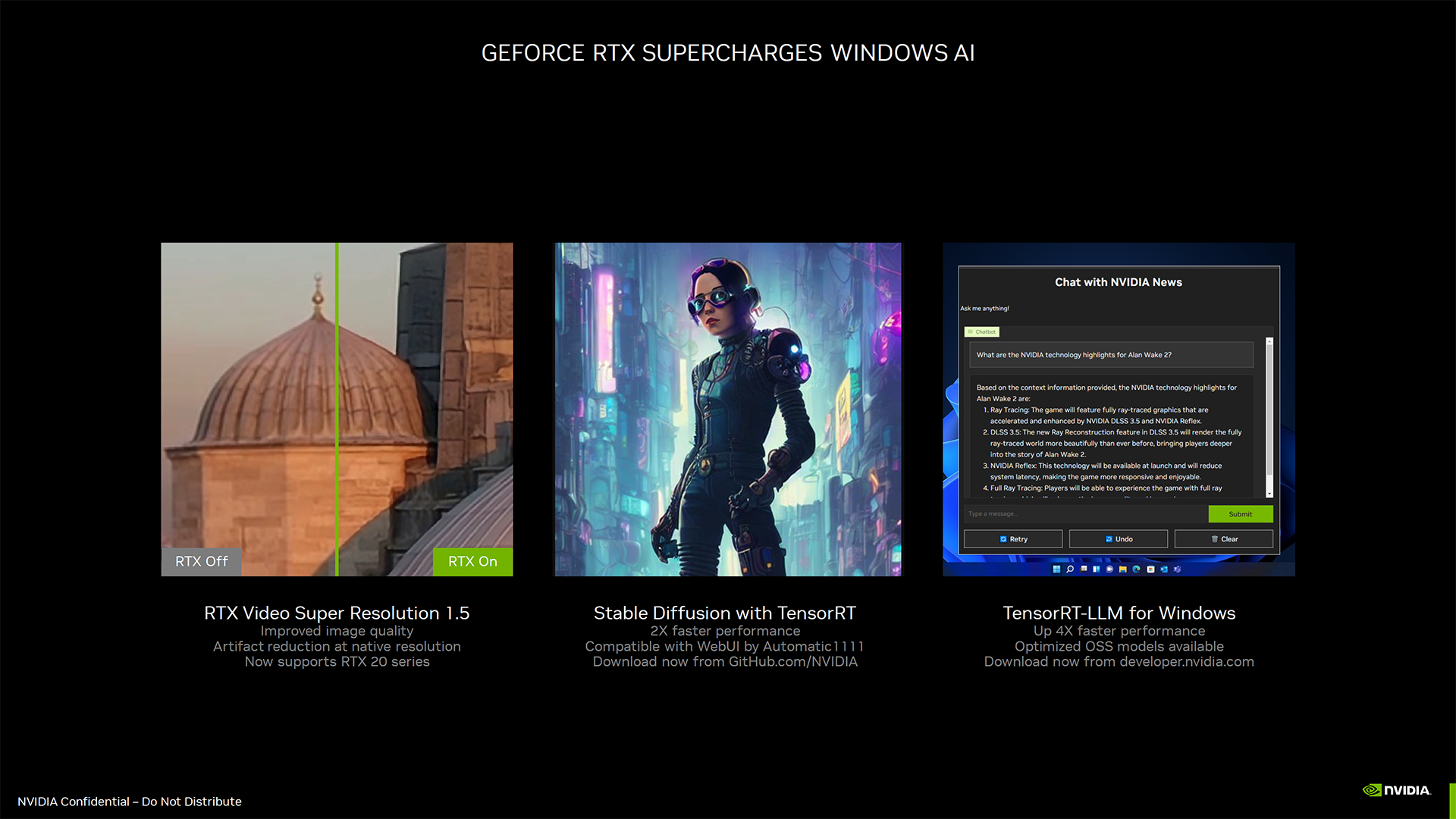

Nvidia has been busy working on further improvements to its suite of AI/ML (Artificial Intelligence/Machine Learning) and LLM (Large Language Model) tools. The latest additions are TensorRT and TensorRT-LLM, designed to optimize performance on consumer GPUs, including many of the best graphics cards, for tasks like Stable Diffusion image generation and Llama 2 text generation. We’ve tested some of Nvidia’s latest GPUs using TensorRT and found that Stable Diffusion performance improved by up to 70%. TensorRT should be available for download from Nvidia’s GitHub page now, though we had early access for purposes of this initial look.

We’ve seen a lot of movement in Stable Diffusion over the past year or so. Our first look used Automatic1111’s webui, which initially only had support for Nvidia GPUs under Windows. Since then, the number of forks and alternative text-to-image AI generation tools has exploded, and both AMD and Intel have released more finely tuned libraries that have closed the gap somewhat with Nvidia’s performance. You can see our latest roundup of Stable Diffusion benchmarks in our AMD RX 7800 XT and RX 7700 XT reviews. Now, Nvidia is ready to widen the gap again with TensorRT.

The basic idea is similar to what AMD and Intel have already done. Leveraging ONNX, an open format for AI and ML models and operators, the base Hugging Face Stable Diffusion model gets converted into ONNX format. From there, you can further optimize performance for the specific GPU that you’re using. It takes a few minutes (or sometimes more) for TensorRT to tune things, but once complete, you should get a substantial boost in performance along with better memory utilization.

We’ve run all of Nvidia’s latest RTX 40-series GPUs through the tuning process (each has to be done separately for optimal performance), along with testing the base Stable Diffusion performance and performance using Xformers. We’re not quite ready for the full update comparing AMD, Intel, and Nvidia performance in Stable Diffusion, as we’re retesting a bunch of additional GPUs using the latest optimized tools, so this initial look is focused only on Nvidia GPUs. We’ve included one RTX 30-series (RTX 3090) and one RTX 20-series (RTX 2080 Ti) to show how the TensorRT gains apply to all of Nvidia’s RTX line.

Each of the above galleries, at 512×512 and 768×768, uses the Stable Diffusion 1.5 models. We’ve “regressed” to using 1.5 instead of 2.1, as the creator community generally prefers the results of 1.5, though the results should be roughly the same with the newer models. For each GPU, we ran different batch sizes and batch counts to find the optimal throughput, generating 24 images total per run. Then we averaged the throughput of three separate runs to determine the overall rate — so 72 images total were generated for each model format and GPU (not counting discarded runs).
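To make the arithmetic behind those numbers concrete, here is a minimal sketch of how a throughput figure could be derived. The function names, the batch-size cap of eight, and the example timings are all hypothetical; this is not the actual benchmark script.

```python
from statistics import mean

IMAGES_PER_RUN = 24  # total images generated per run, as described above


def batch_plans(total=IMAGES_PER_RUN, max_batch_size=8):
    """List (batch_size, batch_count) pairs whose product equals `total`.

    The cap of 8 on batch size is an assumption made for illustration.
    """
    return [(size, total // size)
            for size in range(1, max_batch_size + 1)
            if total % size == 0]


def images_per_minute(run_seconds, images_per_run=IMAGES_PER_RUN):
    """Average the per-run throughput (images/min) over several runs."""
    return mean(images_per_run / (seconds / 60.0) for seconds in run_seconds)


# Hypothetical wall-clock times for three runs, in seconds (not measured data).
example_runs = [60.0, 48.0, 40.0]
print(batch_plans())
print(round(images_per_minute(example_runs), 1))
```

With these made-up timings, the three runs work out to 24, 30, and 36 images per minute, for an average of about 30.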

Various factors come into play with the overall throughput. GPU compute matters quite a bit, as does memory bandwidth. VRAM capacity tends to be a lesser factor, other than potentially allowing for larger image resolution targets or batch sizes — there are things you can do with 24GB of VRAM that won’t be possible with 8GB, in other words. L2 cache sizes may also factor in, though we didn’t attempt to model this directly. What we can say is that the 4060 Ti 16GB and 8GB cards, which are basically the same specs (slightly different clocks due to the custom model on the 16GB), had nearly identical performance and optimal batch sizes.

There are some modest differences in relative performance, depending on the model format used. The base model is the slowest, with Xformers boosting performance by anywhere from 30–80 percent for 512×512 images, and 40–100 percent for 768×768 images. TensorRT then boosts performance an additional 50–65 percent at 512×512, and 45–70 percent at 768×768.
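Because the TensorRT gain is measured on top of Xformers, the two gains multiply rather than add. The sketch below works through that for the 512×512 ranges quoted above. Pairing the low ends together and the high ends together is our assumption for illustration; no single GPU necessarily hit both ends of either range.

```python
def compound_speedup(*gains_percent):
    """Combine successive percentage gains into one overall multiplier."""
    factor = 1.0
    for gain in gains_percent:
        factor *= 1.0 + gain / 100.0
    return factor


# 512x512 ranges from the text: Xformers +30 to +80 percent over the base
# model, then TensorRT a further +50 to +65 percent over Xformers.
low_end = compound_speedup(30, 50)    # roughly 1.95x the base model
high_end = compound_speedup(80, 65)   # roughly 2.97x the base model
print(f"{low_end:.2f}x to {high_end:.2f}x over the base model")
```

Put another way, under those assumptions TensorRT lands at somewhere between about two and three times the throughput of the untuned base model at 512×512.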

What’s interesting is that the smallest gains (of the GPUs tested so far) come from the RTX 3090. It’s not exactly clear what the limiting factor might be, and we’ll have to test additional GPUs to come to any firm conclusions. The RTX 40-series has fourth generation Tensor cores, the RTX 30-series has third generation Tensor cores, and the RTX 20-series has second generation Tensor cores (with the Volta architecture being first generation). Newer architectures should be more capable, in other words, though for the sort of work required in Stable Diffusion it mostly seems to boil down to raw compute and memory bandwidth.

We’re not trying to make this the full Nvidia versus the world performance comparison, but as an example, updated testing of the RX 7900 XTX tops out at around 18–19 images per minute for 512×512, and around five images per minute at 768×768. We’re working on full testing of the AMD GPUs with the latest Automatic1111 DirectML branch, and we’ll have an updated Stable Diffusion compendium once that’s complete. Note also that Intel’s Arc A770 manages 15.5 images/min at 512×512, and 4.7 images/min at 768×768.

So what’s going on exactly with TensorRT that it can improve performance so much? I spoke with Nvidia about this topic, and it’s mostly about optimizing resources and model formats.

ONNX was originally developed by Facebook and Microsoft, but is an open source initiative based around the Apache License model. ONNX is designed to allow AI models to be used with a wide variety of backends: PyTorch, OpenVINO, DirectML, TensorRT, etc. ONNX allows for a common definition of different AI models, providing a computation graph model along with the necessary built-in operators and a set of standard data types. This allows the models to be easily transported between various AI acceleration frameworks.

TensorRT meanwhile is designed to be more performant on Nvidia GPUs. In order to take advantage of TensorRT, a developer would normally need to write their models directly into the format expected by TensorRT, or convert an existing model into that format. ONNX helps simplify this process, which is why it has been used by AMD (DirectML) and Intel (OpenVINO) for those tuned branches of Stable Diffusion.

Finally, one of the options with TensorRT is that you can also tune for an optimal path with a model. In our case, we’re doing batches of 512×512 and 768×768 images. The generic TensorRT model we generate may have a dynamic image size of 512×512 to 1024×1024, with a batch size of one to eight, and an optimal configuration of 512×512 and a batch size of one. Doing 512×512 batches of eight on that generic model might end up being 10% slower, give or take. So we can build another TensorRT model that specifically targets 512×512 at a batch size of eight, or 768×768 at a batch size of four, or whatever. And we did all of this to find the best configuration for each GPU.
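TensorRT's optimization profiles are defined by a minimum, optimal, and maximum input shape, which is what the generic versus targeted distinction above comes down to. The following is a plain-Python sketch of that idea only. It does not call TensorRT, and the `Shape`, `Profile`, and `pick_profile` names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Shape:
    batch: int
    height: int
    width: int


@dataclass(frozen=True)
class Profile:
    name: str
    min_shape: Shape
    opt_shape: Shape
    max_shape: Shape

    def supports(self, s):
        """True if the request falls inside this profile's min/max range."""
        lo, hi = self.min_shape, self.max_shape
        return (lo.batch <= s.batch <= hi.batch
                and lo.height <= s.height <= hi.height
                and lo.width <= s.width <= hi.width)


def pick_profile(profiles, request):
    """Prefer a profile tuned for exactly this shape, else any that fits."""
    candidates = [p for p in profiles if p.supports(request)]
    for p in candidates:
        if p.opt_shape == request:
            return p
    return candidates[0] if candidates else None


# The generic and targeted configurations described in the text.
generic = Profile("generic", Shape(1, 512, 512), Shape(1, 512, 512),
                  Shape(8, 1024, 1024))
s512x8 = Shape(8, 512, 512)
s768x4 = Shape(4, 768, 768)
profiles = [generic,
            Profile("static-512x8", s512x8, s512x8, s512x8),
            Profile("static-768x4", s768x4, s768x4, s768x4)]

print(pick_profile(profiles, Shape(8, 512, 512)).name)  # static-512x8
print(pick_profile(profiles, Shape(2, 640, 640)).name)  # generic
```

The trade-off is flexibility versus speed: the generic profile accepts any shape in its range, while each targeted profile only handles the one shape it was tuned for.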

AMD’s DirectML fork has some similar options, though right now there are some limitations we’ve encountered (we can’t do a batch size other than one, as an example). We anticipate further tuning of AMD and Intel models as well, though over time the gains are likely to taper off.

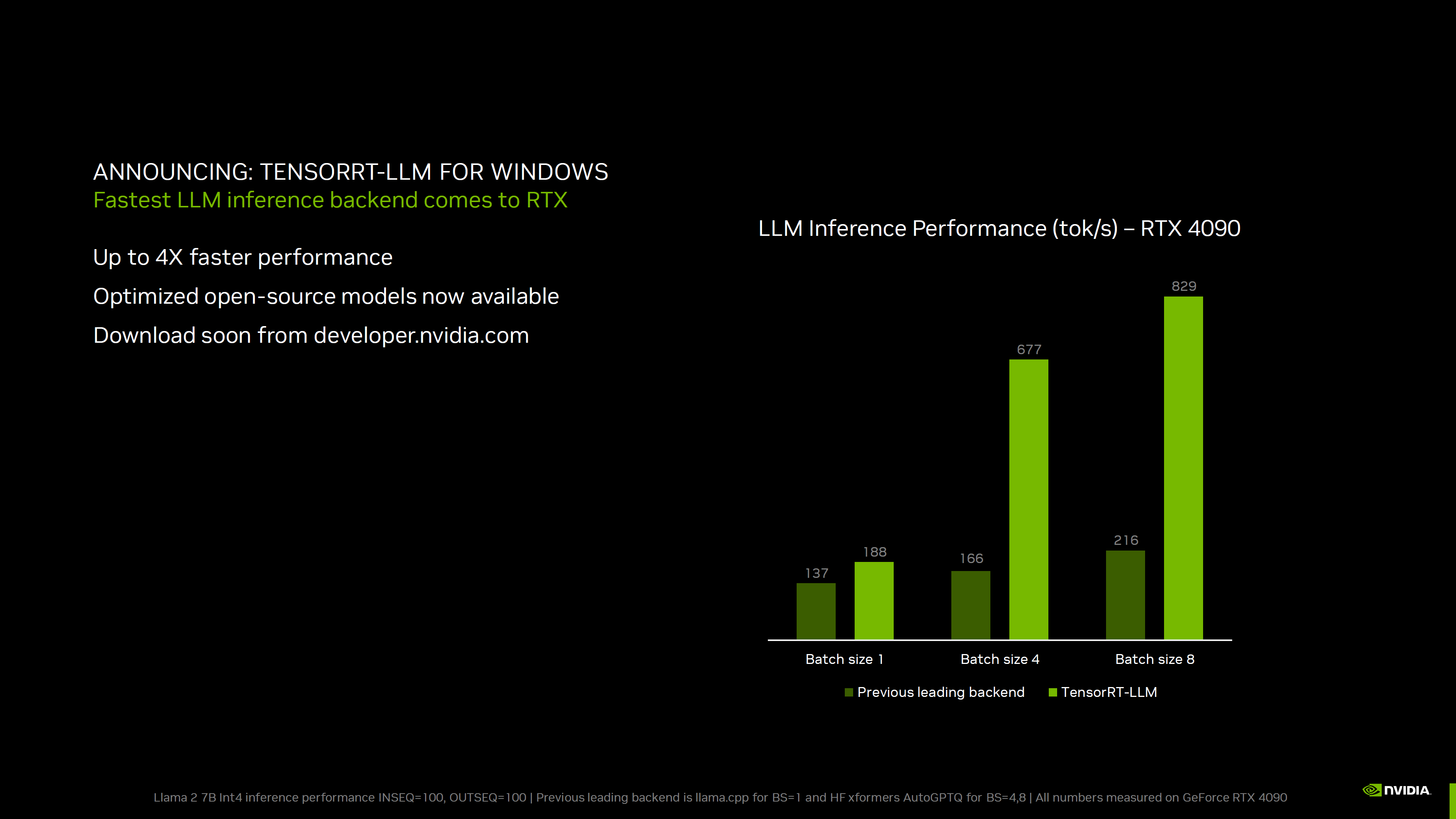

The TensorRT update isn’t just for Stable Diffusion, of course. Nvidia shared the above slide detailing improvements it has measured with Llama 2 7B int4 inference, using TensorRT. That’s a text generation tool with seven billion parameters.

As the chart shows, generating a single batch of text shows a modest benefit, but the GPU in this case (RTX 4090) doesn’t appear to be getting a full workout. Increasing the batch size to four boosts overall throughput by 3.6X, while a batch size of eight gives a 4.4X speedup. Larger batch sizes in this case can be used to generate multiple text responses, allowing the user to select the one they prefer, or even combine parts of the output, if that’s useful.
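Those multipliers also hint at where the returns start to diminish. The short sketch below divides each speedup by its batch size. It assumes the 3.6X and 4.4X figures are measured against the same single-batch baseline, which the slide as described here doesn't state explicitly.

```python
# Speedups quoted from Nvidia's slide, keyed by batch size.
BATCH_SPEEDUP = {4: 3.6, 8: 4.4}


def scaling_efficiency(batch_size, speedup):
    """Fraction of ideal linear scaling achieved at this batch size."""
    return speedup / batch_size


for batch_size, speedup in BATCH_SPEEDUP.items():
    efficiency = scaling_efficiency(batch_size, speedup)
    print(f"batch {batch_size}: {efficiency:.0%} of linear scaling")

# Extra throughput gained by doubling the batch size from four to eight.
marginal_gain = BATCH_SPEEDUP[8] / BATCH_SPEEDUP[4]
print(f"4 -> 8: {marginal_gain:.2f}x")
```

Under that assumption, a batch size of four scales at about 90 percent of linear, while eight drops to about 55 percent, so doubling the batch from four to eight adds only around 22 percent more throughput.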

TensorRT-LLM isn’t out yet, but it should be available on developer.nvidia.com (free registration required) in the near future.

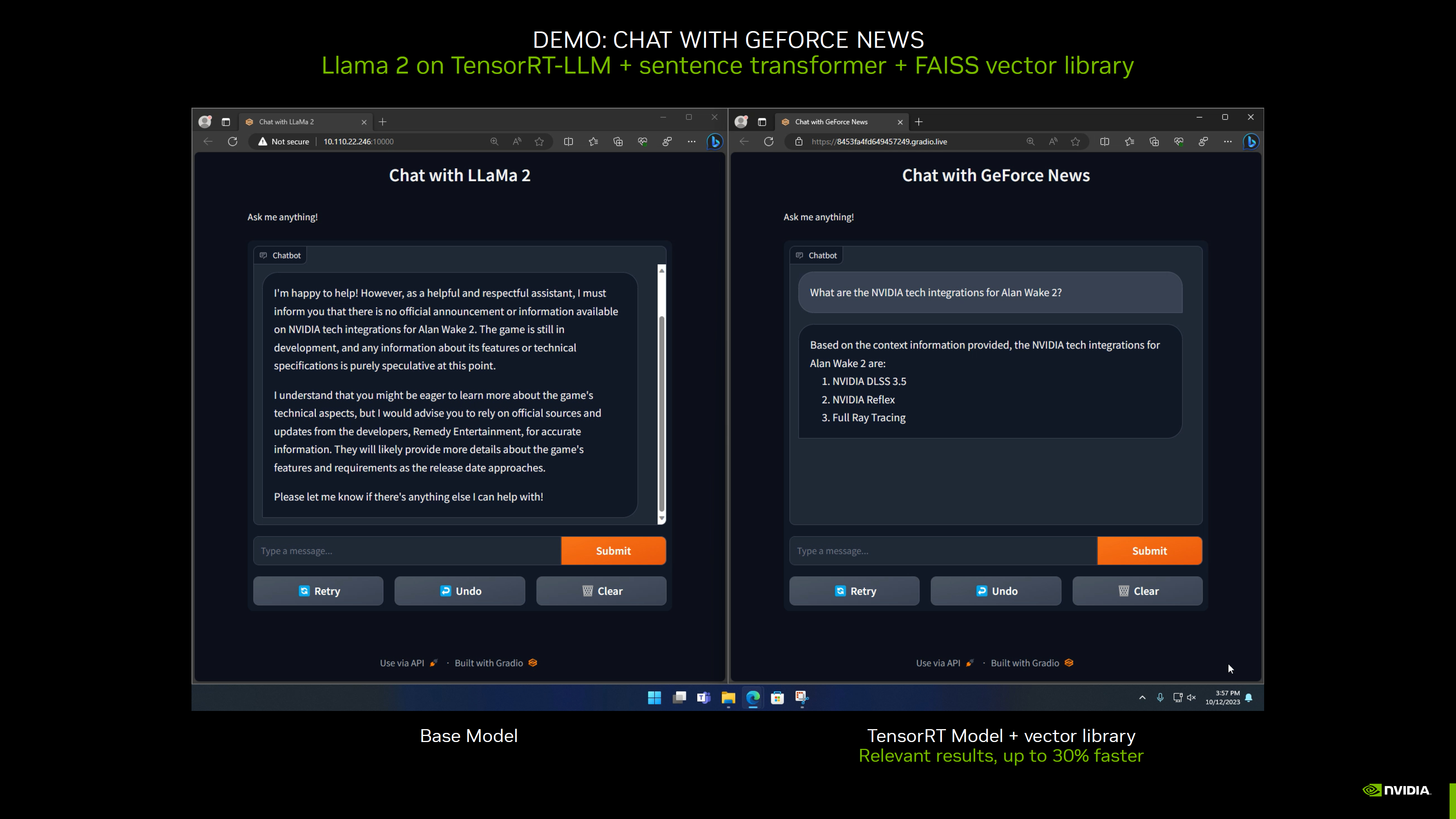

Finally, as part of its AI-focused updates for LLMs, Nvidia is also working on a TensorRT-LLM tool that will allow the use of Llama 2 as the base model, and then import local data for more domain specific and updated knowledge. As an example of what this can do, Nvidia imported 30 recent Nvidia news articles into the tool and you can see the difference in response between the base Llama 2 model and the model with this local data.

The base model provides all the information about how to generate meaningful sentences and such, but it has no knowledge of recent events or announcements. In this case, it thinks no official information about Alan Wake 2 has been released. With the updated local data, however, it’s able to provide a more meaningful response.

Another example Nvidia gave was using such local data with your own email or chat history. Then you could ask it things like, “What movie were Chris and I talking about last year?” and it would be able to provide an answer. It’s potentially a smarter search option, using your own information.

We can’t help but see this as a potential use case for our own HammerBot, though we’ll have to see if it’s usable on our particular servers (since it needs an RTX card). As with all LLMs, the results can be a bit variable in quality depending on the training data and the questions you ask.

Nvidia also announced updates to its Video Super Resolution, now with support for RTX 20-series GPUs and native artifact reduction. The latter means if you’re watching a 1080p stream on a 1080p monitor, VSR can still help with noise removal and image enhancement. VSR 1.5 is available with Nvidia’s latest drivers.