Vectara has published an AI hallucination leaderboard that ranks leading AI chatbots by how rarely they ‘hallucinate.’ It is designed to highlight the extent to which the various public large language models (LLMs) hallucinate, but what does this mean, why is it important, and how is it being measured?

One characteristic of AI chatbots we have become wary of is their tendency to ‘hallucinate,’ that is, to make up facts to fill in gaps. A high-profile example came when law firm Levidow, Levidow & Oberman got in trouble after it “submitted non-existent judicial opinions with fake quotes and citations created by the artificial intelligence tool ChatGPT.” It was noted that made-up legal decisions such as Martinez v. Delta Air Lines have some traits consistent with actual judicial decisions, but closer scrutiny revealed portions of “gibberish.”

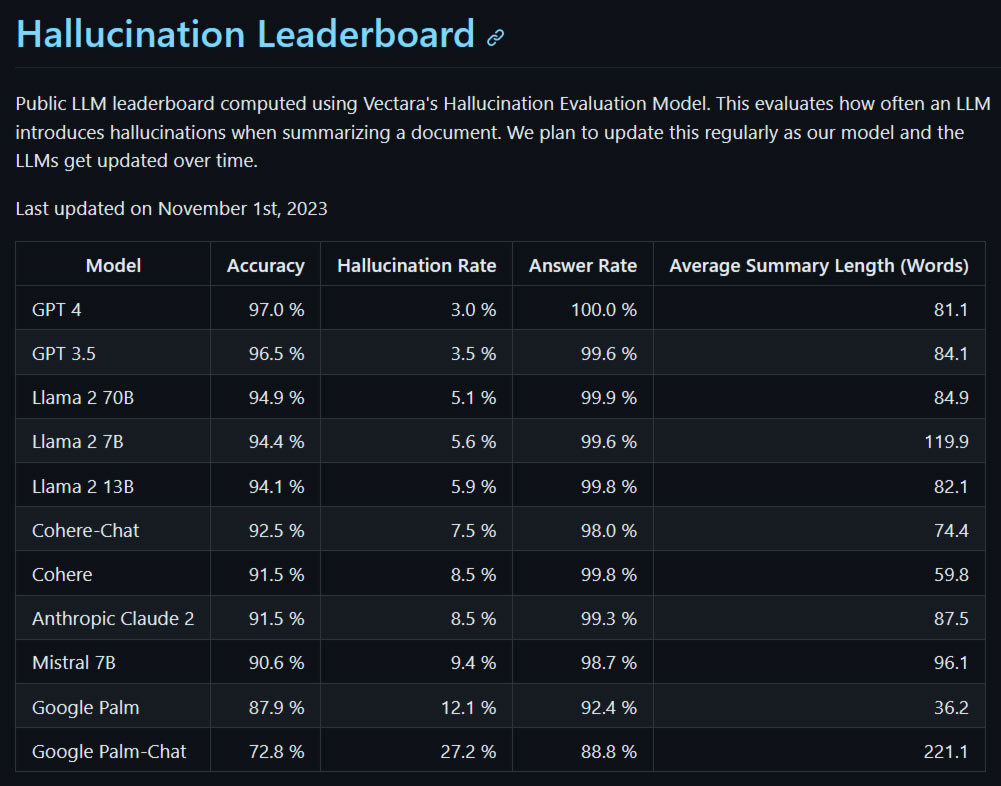

If you think about the potential use of LLMs in areas such as health, industry, and defense, it’s clearly imperative to stamp out AI hallucinations as part of any ongoing development. To measure how often LLMs hallucinate under controlled conditions, where the reference material is known, Vectara ran the following test on eleven public LLMs:

- Feed the LLMs a stack of over 800 short reference documents.

- Ask the LLMs to provide factual summaries of the documents, as directed by a standard prompt.

- Feed the answers to a model that detects the introduction of data that wasn’t contained in the source(s).

The query prompt used was as follows: “You are a chat bot answering questions using data. You must stick to the answers provided solely by the text in the passage provided. You are asked the question ‘Provide a concise summary of the following passage, covering the core pieces of information described.’”
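The three steps and the prompt above can be sketched as a small evaluation loop. This is only an illustration under stated assumptions, not Vectara's actual code: `summarize`, `consistency_score`, the 0.5 `threshold`, and the word-overlap `toy_consistency_score` are hypothetical stand-ins for the LLM under test and for the hallucination detection model.

```python
import re
from typing import Callable, Sequence

# Prompt text as quoted in the article.
PROMPT = (
    "You are a chat bot answering questions using data. "
    "You must stick to the answers provided solely by the text in the passage provided. "
    "You are asked the question 'Provide a concise summary of the following passage, "
    "covering the core pieces of information described.'"
)


def hallucination_rate(
    documents: Sequence[str],
    summarize: Callable[[str, str], str],           # hypothetical: (prompt, document) -> summary
    consistency_score: Callable[[str, str], float],  # hypothetical: (document, summary) -> score in [0, 1]
    threshold: float = 0.5,                          # assumed cutoff, not taken from the article
) -> float:
    """Return the fraction of summaries the detector flags as unsupported by their source."""
    if not documents:
        raise ValueError("at least one document is required")
    flagged = 0
    for doc in documents:
        summary = summarize(PROMPT, doc)
        # A low score means the summary contains material the source does not support.
        if consistency_score(doc, summary) < threshold:
            flagged += 1
    return flagged / len(documents)


def toy_consistency_score(source: str, summary: str) -> float:
    """Toy detector: share of summary words that also occur in the source.

    A real detector would be a trained model; this stand-in only keeps the sketch runnable.
    """
    source_words = set(re.findall(r"\w+", source.lower()))
    summary_words = re.findall(r"\w+", summary.lower())
    if not summary_words:
        return 0.0
    return sum(word in source_words for word in summary_words) / len(summary_words)
```

To try it, pass a list of short documents, a function that calls the LLM being evaluated, and a detector function. The returned fraction corresponds to the hallucination rate column on the leaderboard, assuming the rate is counted per summary.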

The leaderboard will be updated periodically, to keep pace with the refinement of existing LLMs and the introduction of new and improved ones. For now, the initial data from Vectara’s Hallucination Evaluation Model shows how the LLMs stand.

GPT-4 did best, with the lowest hallucination rate and the highest accuracy; we have to wonder if it could have kept Levidow, Levidow & Oberman out of trouble. At the other end of the table, two Google LLMs fared much worse. By Vectara’s measurements, Google Palm-Chat has a hallucination rate of over 27%, which suggests that its factual summaries of reference material are unreliable at best and thoroughly littered with hallucinatory debris.

In the FAQ section of its GitHub page, Vectara explains that it chose to use a model to evaluate the respective LLMs due to considerations such as the scale of the testing and consistency of assessment. It also asserts that “building a model for detecting hallucinations is much easier than building a model that is free of hallucinations.”

The table as it stands today has already caused some heated discussion on social media. It could also develop into a useful reference or benchmark that people wishing to use LLMs for serious — noncreative — tasks will look at closely.

In the meantime, we look forward to Elon Musk’s recently announced Grok getting measured by this AI Hallucination Evaluation Model yardstick. The chatbot launched in beta form 10 days ago with an obvious catchall excuse for inaccuracy and related blunders, with its creators describing Grok as humorous and sarcastic. Perhaps that’s fitting if Grok wants a job crafting social media posts.