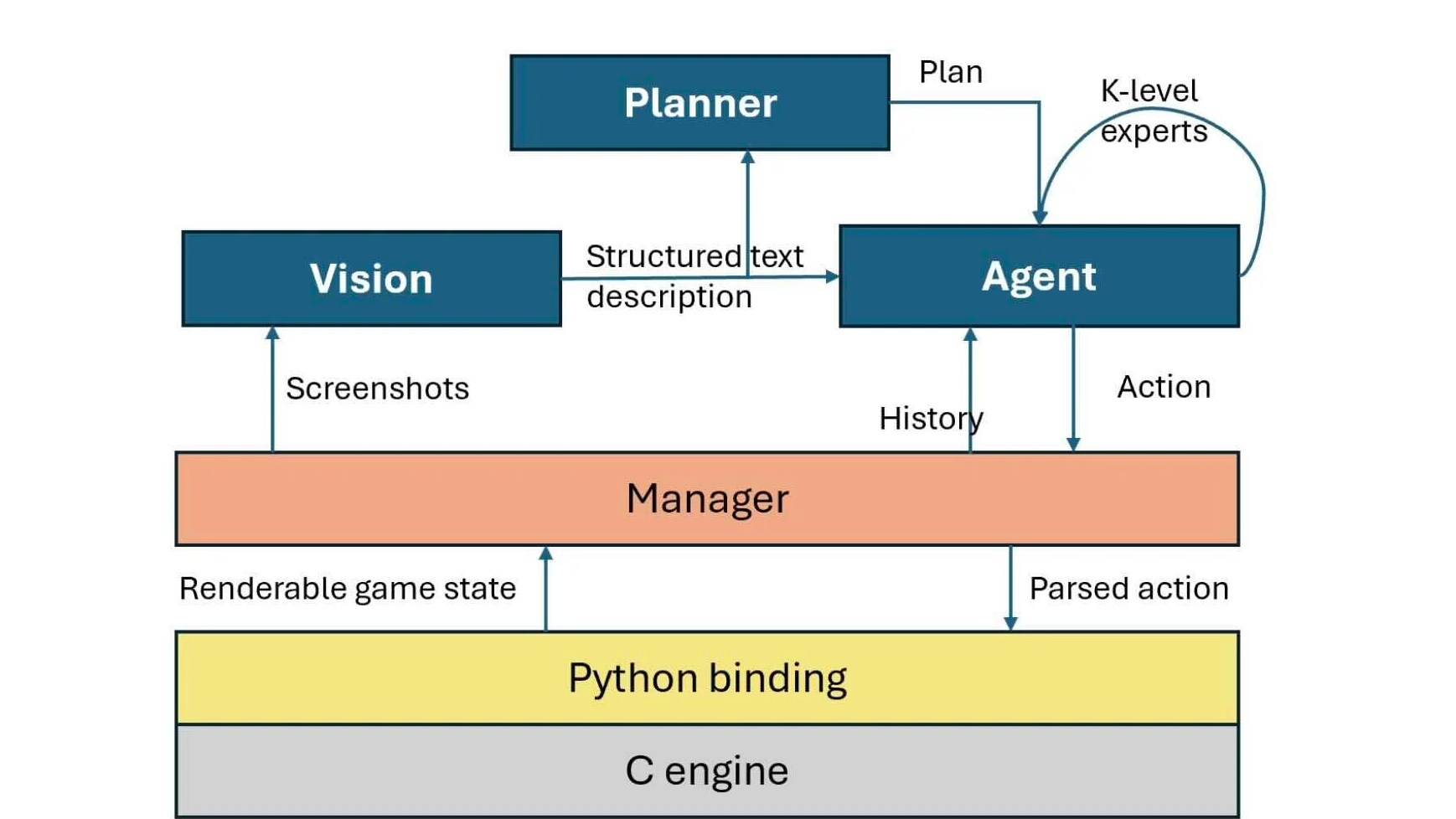

Microsoft scientist and University of York researcher Adrian de Wynter recently put GPT-4 to the test by having it play Doom without any prior training, reports The Register. He concluded that GPT-4 can play Doom with some degree of efficacy without prior training. However, the setup requires GPT-4V, the vision-enabled version of the model, so the AI can see the game, plus Python and Matplotlib to make its inputs possible.

According to the original paper, GPT-4 can open doors, fight enemies, and fire weapons while playing Doom. The basic gameplay functionality, including navigating the world, is pretty much all there. However, the AI has no sense of object permanence to speak of: as soon as an enemy moves offscreen, the model is no longer aware of it at all. Even though its instructions explain what to do if an offscreen enemy is causing damage, the model cannot react to anything outside its narrow field of view.

Discussing the ethical implications of his research, de Wynter remarks, “It is quite worrisome how easy it was for me to build code to get the model to shoot something and for the model to accurately shoot something without actually second-guessing the instructions.”

He continues, “So, while this is a very interesting exploration around planning and reasoning, and could have applications in automated video game testing, it is quite obvious that this model is not aware of what it is doing. I strongly urge everyone to think about what deployment of these models implies for society and their potential misuse.”

He’s not wrong, of course: the cutting edge of weapons technology gets scarier every year, and AI has already seen frightening growth and economic impact elsewhere. Back in 1997, IBM’s Deep Blue supercomputer famously defeated world chess champion Garry Kasparov. Now a single person has built a quick-and-dirty GPT-4V setup that can make its way through Doom. Imagine what might be done with a military behind such work.

Thankfully, we’re not yet producing kill-bots with aim and reasoning on par with the best Ultrakill and Doom players, much less the unstoppable force of the average T-800. For now, the main “AI assault” most people are worried about relates to generative AI and its ongoing impact on independent creatives and industry professionals alike.

But the fact that GPT-4 didn’t hesitate to shoot people-shaped objects is still concerning. This is as good a time as any to remind readers that Isaac Asimov’s Three Laws of Robotics, which dictate that robots shall not harm humans, have always been fictional (and even in his stories, they didn’t always work). Hopefully those pursuing AI projects can find ways to properly codify protections for humanity into their models before Skynet comes online.