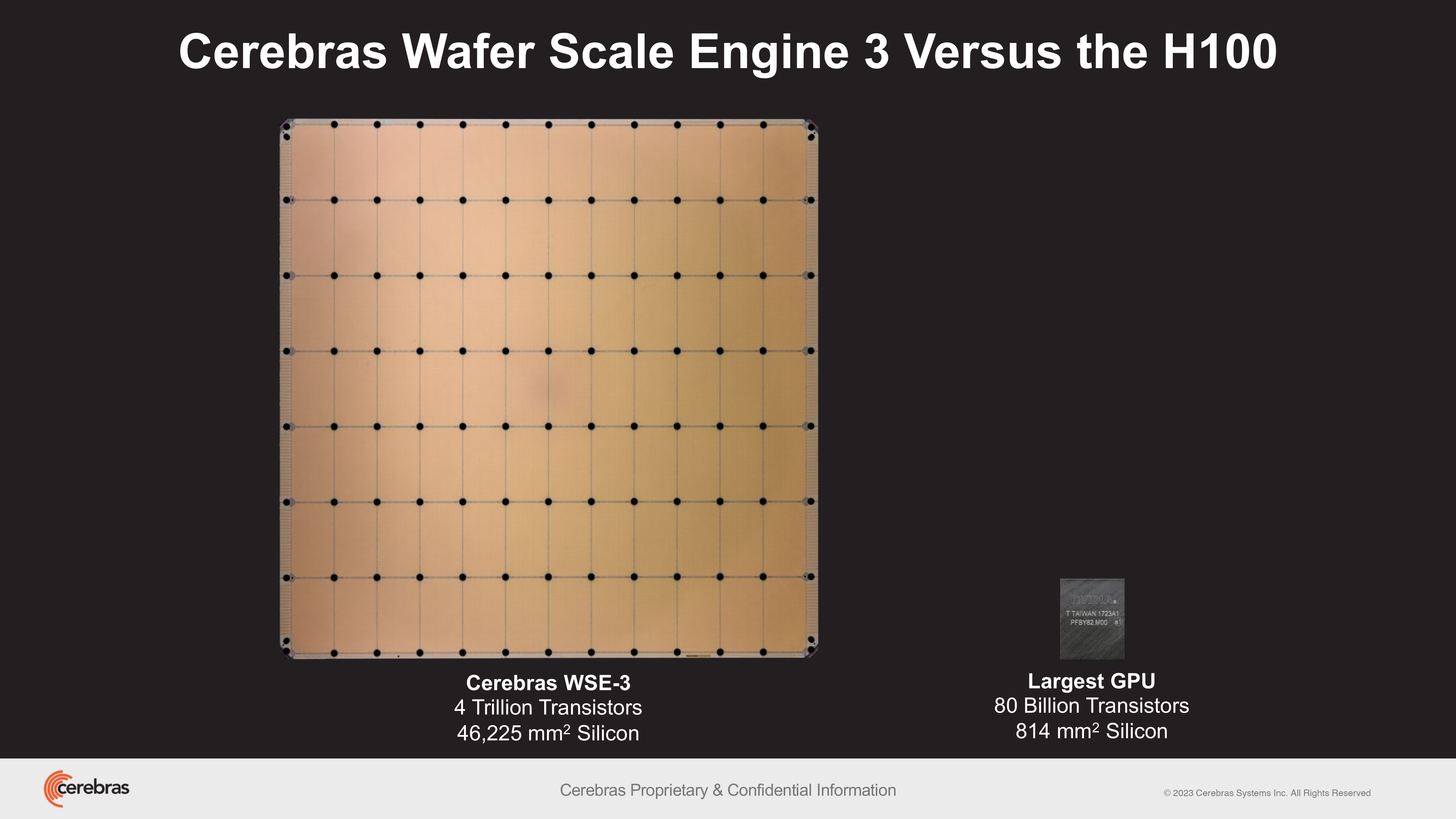

Cerebras Systems has unveiled its Wafer Scale Engine 3 (WSE-3), a breakthrough wafer-scale AI chip with double the performance of its predecessor, the WSE-2. The new device packs 4 trillion transistors made on TSMC’s 5nm-class fabrication process, along with 900,000 AI cores and 44GB of on-chip SRAM, and has a peak performance of 125 FP16 petaFLOPS. Cerebras’s WSE-3 will be used to train some of the industry’s largest AI models.

The WSE-3 powers Cerebras’s CS-3 supercomputer, which can be used to train AI models with up to 24 trillion parameters — a significant leap over supercomputers powered by the WSE-2 and other modern AI processors. The supercomputer can support 1.5TB, 12TB, or 1.2PB of external memory, which allows it to store massive models in a single logical space without partitioning or refactoring — streamlining the training process and enhancing developer efficiency.

In terms of scalability, the CS-3 can be configured in clusters of up to 2048 systems. A four-system setup can fine-tune 70-billion-parameter models in just one day, and a full-scale cluster can train a Llama 70B model from scratch in the same timeframe.
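To put the cluster size in perspective, the per-chip peak quoted above can be multiplied out to a theoretical aggregate. The sketch below is only back-of-the-envelope arithmetic: it assumes one WSE-3 per CS-3 system and perfectly linear scaling, and the function name is illustrative rather than anything from Cerebras's software.

```python
# Figures taken from the article; linear scaling is an idealizing assumption.
PEAK_PFLOPS_PER_SYSTEM = 125   # FP16 petaFLOPS per WSE-3 / CS-3
MAX_SYSTEMS = 2048             # largest supported cluster size

def cluster_peak_exaflops(systems, pflops_per_system=PEAK_PFLOPS_PER_SYSTEM):
    """Theoretical aggregate peak in exaFLOPS (1 exaFLOPS = 1000 petaFLOPS)."""
    return systems * pflops_per_system / 1000

print(cluster_peak_exaflops(MAX_SYSTEMS))  # 256.0
```

Real training throughput will fall below this ceiling, since peak figures ignore communication and utilization overheads.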

The latest Cerebras Software Framework offers native support for PyTorch 2.0 and also supports dynamic and unstructured sparsity, which can accelerate training by up to eight times compared with traditional methods.

Cerebras has emphasized the CS-3’s superior power efficiency and ease of use. Despite delivering double the performance, the CS-3 draws the same power as its predecessor. It also simplifies the training of large language models (LLMs), requiring up to 97% less code than GPUs. For example, a GPT-3-sized model requires only 565 lines of code on the Cerebras platform, according to the company.

The company has already seen significant interest in the CS-3, and has a substantial backlog of orders from various sectors — including enterprise, government, and international clouds. Cerebras is also collaborating with institutions such as the Argonne National Laboratory and the Mayo Clinic, highlighting the CS-3’s potential in healthcare.

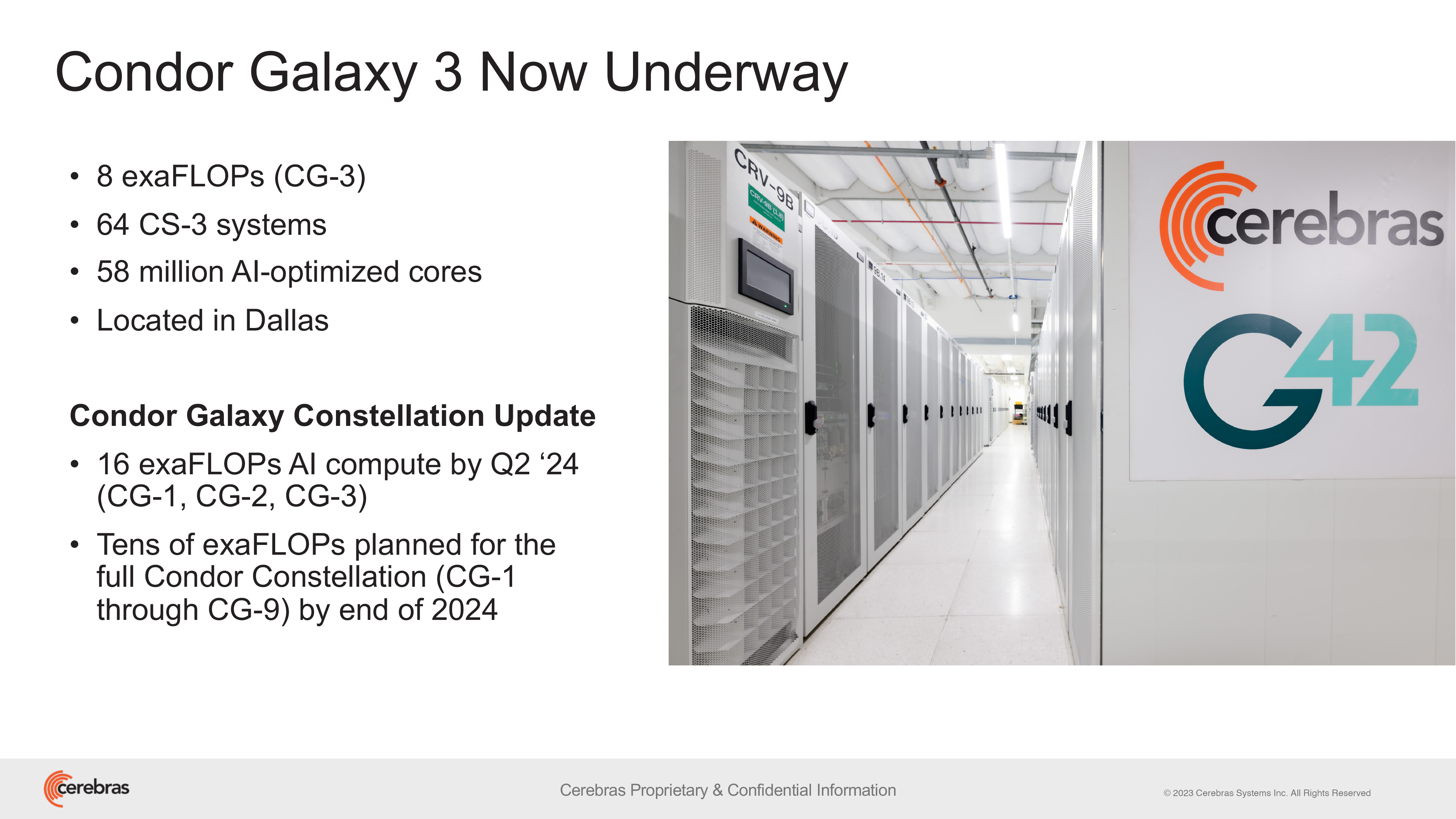

A strategic partnership between Cerebras and G42 is also set to expand with the construction of the Condor Galaxy 3, an AI supercomputer featuring 64 CS-3 systems (packing a whopping 57,600,000 cores). Together, the two companies have already created two of the biggest AI supercomputers in the world: the Condor Galaxy 1 (CG-1) and the Condor Galaxy 2 (CG-2), which are based in California and have a combined performance of 8 exaFLOPs. The partnership aims to deliver tens of exaFLOPs of AI compute globally.

“Our strategic partnership with Cerebras has been instrumental in propelling innovation at G42, and will contribute to the acceleration of the AI revolution on a global scale,” said Kiril Evtimov, Group CTO of G42. “Condor Galaxy 3, our next AI supercomputer boasting 8 exaFLOPs, is currently under construction and will soon bring our system’s total production of AI compute to 16 exaFLOPs.”
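The Condor Galaxy 3 figures follow directly from the per-chip numbers given earlier. As a quick sanity check, the sketch below multiplies them out; it assumes one WSE-3 per CS-3 system and simple additive scaling, and the variable names are illustrative.

```python
# Per-chip figures from the article.
CORES_PER_WSE3 = 900_000
PEAK_PFLOPS_PER_WSE3 = 125      # FP16 petaFLOPS
CG3_SYSTEMS = 64                # CS-3 systems in Condor Galaxy 3
CG1_CG2_EXAFLOPS = 8            # combined CG-1 + CG-2, as stated

cg3_cores = CG3_SYSTEMS * CORES_PER_WSE3
cg3_exaflops = CG3_SYSTEMS * PEAK_PFLOPS_PER_WSE3 / 1000
total_exaflops = CG1_CG2_EXAFLOPS + cg3_exaflops

print(f"{cg3_cores:,} cores")        # 57,600,000 cores
print(f"{cg3_exaflops} exaFLOPs")    # 8.0 exaFLOPs
print(f"{total_exaflops} exaFLOPs")  # 16.0 exaFLOPs
```

The results match the 57,600,000 cores, 8 exaFLOPs, and 16 exaFLOPs total cited for the system.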