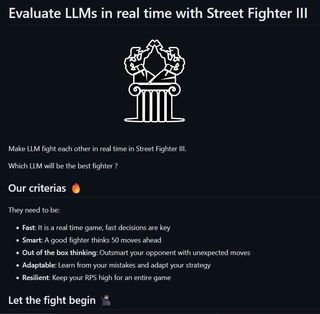

A new artificial intelligence (AI) benchmark based on the classic arcade title Street Fighter III was devised at the Mistral AI hackathon in San Francisco last week. The open-source LLM Colosseum benchmark was developed by Stan Girard and Quivr Brain. The game runs in an emulator and lets the LLMs duke it out in unconventional yet spectacular fashion.

AI enthusiast Matthew Berman introduces the new beat-em-up-based large language model (LLM) tournament in the video embedded above. In addition to showcasing the street fighting action, Berman’s video walks you through installing this open-source project on a home PC or Mac, so you can test it for yourself.

This isn’t a typical LLM benchmark. Smaller models usually have a latency and speed advantage, which translates into winning more bouts in this game. Human beat-em-up players benefit from fast reactions when countering their opponents’ moves, and the same holds true in this AI-vs-AI action.

The LLMs make real-time decisions about how to fight. Because they are text-based models, they are prompted on how to react to the game action: each model first analyzes the game state for context and then considers its move options. Move options include: move closer, move away, fireball, megapunch, hurricane, and megafireball.
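To make the prompt-then-act loop more concrete, here is a minimal Python sketch of a single decision step, assuming a hypothetical `GameState` with health and distance fields, a stand-in `stub_llm` in place of a real model call, and a simple regex parser that pulls move names out of the model's free-text reply. None of these names come from the LLM Colosseum code; they only illustrate the general pattern of describing the game state in text, listing the allowed moves, and turning the answer back into actions.

```python
import re
from dataclasses import dataclass
from typing import Callable

# Move names as listed in the article; the real project's list may differ or be longer.
MOVES = ["Move closer", "Move away", "Fireball", "Megapunch", "Hurricane", "Megafireball"]

# Longest names first so "Megafireball" wins over shorter alternatives;
# word boundaries stop "Fireball" from matching inside "Megafireball".
_PATTERN = re.compile(
    r"\b(" + "|".join(re.escape(m) for m in sorted(MOVES, key=len, reverse=True)) + r")\b",
    re.IGNORECASE,
)
_CANONICAL = {m.lower(): m for m in MOVES}


@dataclass
class GameState:
    # Hypothetical observation fields, not the emulator's actual format.
    own_health: int
    opponent_health: int
    distance: int  # assumed horizontal gap between the two fighters


def build_prompt(state: GameState) -> str:
    """Describe the game state as text, then list the allowed moves."""
    context = (
        f"You are Ken. Your health: {state.own_health}. "
        f"Opponent health: {state.opponent_health}. "
        f"Distance to opponent: {state.distance}."
    )
    options = "\n".join(f"- {m}" for m in MOVES)
    return f"{context}\nChoose your next moves from:\n{options}\nAnswer with move names only."


def parse_moves(reply: str) -> list[str]:
    """Pull known move names out of a free-text reply, in the order they appear."""
    return [_CANONICAL[name.lower()] for name in _PATTERN.findall(reply)]


def decide(state: GameState, llm: Callable[[str], str]) -> list[str]:
    """One decision step: prompt the model, parse its answer, fall back if no valid move is named."""
    moves = parse_moves(llm(build_prompt(state)))
    return moves or ["Move closer"]  # hypothetical fallback for unusable replies


def stub_llm(prompt: str) -> str:
    # Stand-in for a real model call; always returns the same canned reply.
    return "Opponent is far away. Move closer, then Megafireball!"


print(decide(GameState(own_health=160, opponent_health=120, distance=300), stub_llm))
# ['Move closer', 'Megafireball']
```

The fallback matters in practice: a model that hallucinates a move name or refuses to answer would otherwise leave its fighter standing still, and every round-trip to the model is time the opponent can use.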

In the video, the fights look fluid, and the players appear to be strategic in their countering, blocking, and use of special moves. However, at the time of writing, the project only allows the use of the Ken character, which provides perfect balance but might be less interesting to watch.

So, which is the best Street Fighter III AI? According to the tests undertaken by Girard, OpenAI’s GPT-3.5 Turbo is the appropriately named winner (Elo 1776) among the eight LLMs pitted against each other. In a separate series of tests by Amazon exec Banjo Obayomi, 14 LLMs sparred across 314 individual matches, with Anthropic’s claude_3_haiku ultimately triumphant (Elo 1613).
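The rankings above are expressed as Elo ratings, which turn a pile of head-to-head results into a single number per model. The sketch below shows the standard Elo update in Python, assuming a K-factor of 32, a starting rating of 1500, and made-up model names and results; Girard and Obayomi may have used different parameters, so this will not reproduce their figures.

```python
from collections import defaultdict


def expected_score(rating_a: float, rating_b: float) -> float:
    """Standard Elo expectation: probability-like score of A beating B."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400))


def update(rating_a: float, rating_b: float, score_a: float, k: float = 32.0) -> tuple[float, float]:
    """New ratings after one match; score_a is 1 for an A win, 0 for a loss, 0.5 for a draw."""
    delta = k * (score_a - expected_score(rating_a, rating_b))
    # Zero-sum: whatever A gains, B loses.
    return rating_a + delta, rating_b - delta


def run_tournament(matches: list[tuple[str, str]], initial: float = 1500.0, k: float = 32.0) -> dict[str, float]:
    """Replay (winner, loser) pairs in order; every model starts at `initial`."""
    ratings: dict[str, float] = defaultdict(lambda: initial)
    for winner, loser in matches:
        ratings[winner], ratings[loser] = update(ratings[winner], ratings[loser], 1.0, k)
    return dict(ratings)


# Hypothetical model names and results, not data from either tournament.
results = run_tournament([("model_a", "model_b"), ("model_a", "model_c"), ("model_b", "model_c")])
for name, rating in sorted(results.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name}: {rating:.0f}")
```

Because each update depends on the ratings at that moment, replaying the same matches in a different order yields slightly different final numbers, which is one reason Elo totals from separate tournaments are not directly comparable.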

Interestingly, Obayomi also observed that LLM quirks, whether you call them bugs or features, such as AI hallucinations and AI safety guardrails, sometimes got in the way of a particular model’s beat-em-up performance.

Last but not least, there is the question of whether this is a useful benchmark for LLMs or just an interesting distraction. More complex games could provide more rewarding insights, but their results would probably be more difficult to interpret.