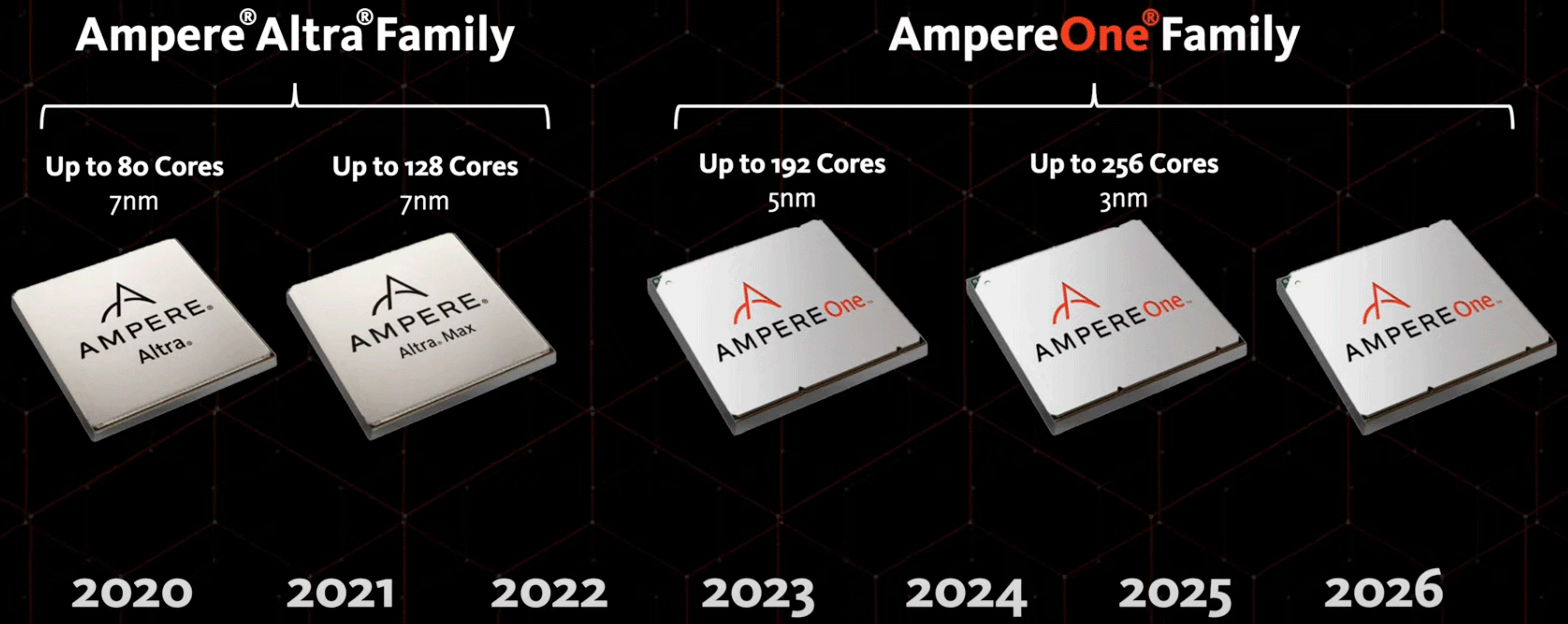

Ampere Computing today introduced its roadmap for the coming years, including new CPUs and collaborations with third parties. In particular, the company said it would launch its all-new 256-core AmpereOne processor next year, made on TSMC’s N3 process technology. Ampere is also teaming up with Qualcomm to build AI inference servers that use Qualcomm’s accelerators. In addition, Ampere is apparently looking at integrating third-party UCIe-compatible chiplets into its own platforms.

256-core CPUs incoming

Ampere has begun shipping the 192-core AmpereOne processors with an eight-channel DDR5 memory subsystem that it introduced a year ago. Later this year, the company plans to introduce 192-core AmpereOne CPUs with a 12-channel DDR5 memory subsystem, which will require a brand-new platform.

Next year, the company will use this platform for its 256-core AmpereOne CPU, which will be made using one of TSMC’s N3 fabrication processes. The company has not disclosed whether the new processor will also feature a new microarchitecture, though it looks like it will continue to feature 2 MB of L2 cache per core.

“We are extending our product family to include a new 256-core product that delivers 40% more performance than any other CPU in the market,” said Renee James, chief executive of Ampere. “It is not just about cores. It is about what you can do with the platform. We have several new features that enable efficient performance, memory, caching, and AI compute.”

The company says that its 256-core CPU will use the same cooling system as its existing offerings, which implies that its thermal design power will remain in the 350-watt ballpark.

Teaming up with Qualcomm for AI servers

While Ampere can certainly address many general-purpose cloud instances, its capabilities for AI are fairly limited. The company itself says that its 128-core AmpereOne CPU with its two 128-bit vector units per core (and supporting INT8, INT16, FP16, and BFloat16 formats) can offer performance comparable to Nvidia’s A10 GPU, albeit at lower power. Ampere certainly needs something better to compete against Nvidia’s A100, H100, or B100/B200.

So Ampere has teamed up with Qualcomm, and the two companies plan to build platforms for LLM inference based on Ampere’s CPUs and Qualcomm’s Cloud AI 100 Ultra accelerators. There is no word on when the platform will be ready, but it demonstrates that Ampere’s ambitions do not end with general-purpose computing.

Chiplet plans

Last but not least, Ampere announced the formation of a UCIe working group within the AI Platform Alliance. The company intends to combine the flexibility of its CPUs with the open UCIe interface to incorporate customer-developed IP into future CPUs, which would essentially enable it to build custom silicon for its clients.