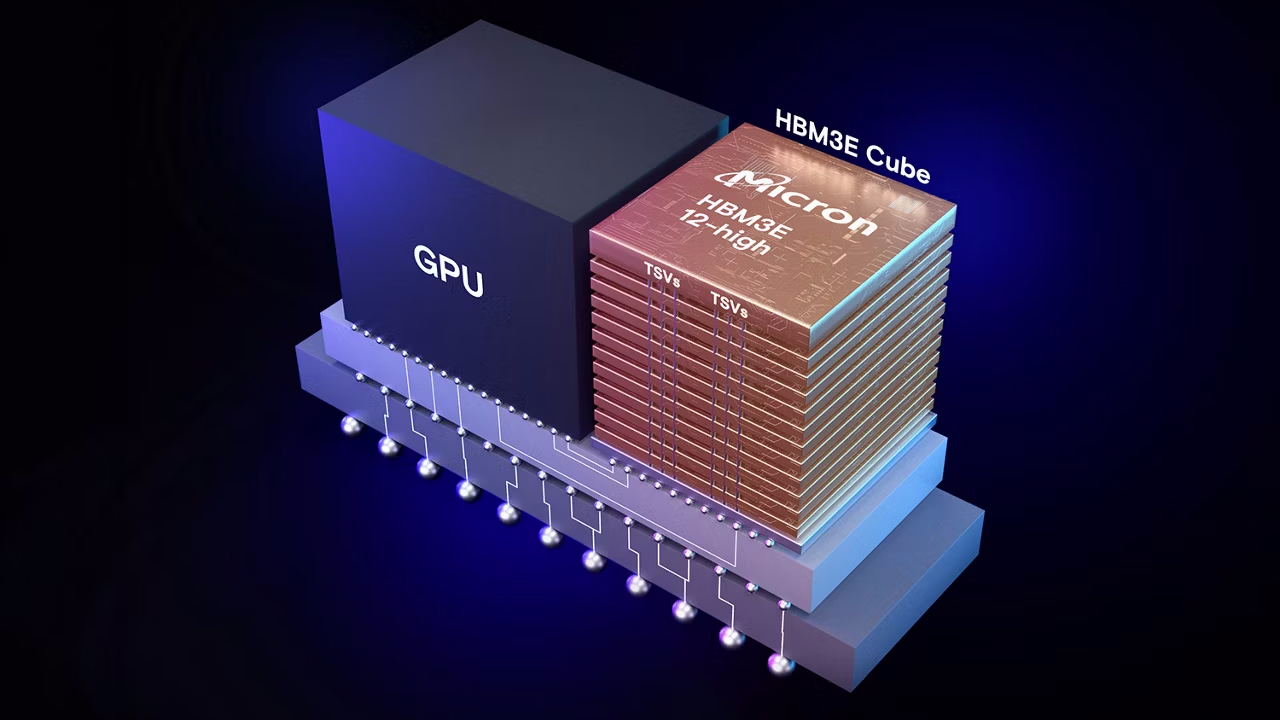

Micron formally announced its 12-Hi HBM3E memory stacks on Monday. The new products feature a 36 GB capacity and are aimed at leading-edge processors for AI and HPC workloads, such as Nvidia’s H200 and B100/B200 GPUs.

Micron’s 12-Hi HBM3E memory stacks offer 36 GB of capacity, 50% more than the 24 GB of the previous 8-Hi versions. This increased capacity allows data centers to run larger AI models, such as Llama 2 with up to 70 billion parameters, on a single processor. This capability eliminates the need for frequent CPU offloading and reduces delays in communication between GPUs, speeding up data processing.
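To put the capacity figures in context, here is a rough back-of-the-envelope sketch in Python. The 3 GB-per-die figure is simply derived from the numbers above (36 GB / 12 and 24 GB / 8). The bytes-per-parameter value (2, as in FP16/BF16) is an assumption for illustration, and the estimate covers model weights only, ignoring KV cache, activations, and any capacity reserved by the processor.

```python
import math

# Derived from the article's figures: 36 GB / 12 dies = 24 GB / 8 dies = 3 GB per die.
GB_PER_DIE = 3


def stack_capacity_gb(dies: int) -> int:
    """Capacity of one HBM3E stack with the given number of DRAM dies."""
    return dies * GB_PER_DIE


def weights_gb(params_billion: float, bytes_per_param: float) -> float:
    """Approximate size of the model weights in GB (weights only)."""
    # 1e9 parameters * bytes per parameter / 1e9 bytes per GB
    return params_billion * bytes_per_param


def stacks_needed(model_gb: float, stack_gb: int) -> int:
    """Minimum number of stacks whose combined capacity holds the weights."""
    return math.ceil(model_gb / stack_gb)


if __name__ == "__main__":
    model = weights_gb(70, 2)  # assumption: 70B parameters at 2 bytes each
    for dies in (8, 12):
        cap = stack_capacity_gb(dies)
        print(f"{dies}-Hi: {cap} GB per stack, "
              f"{stacks_needed(model, cap)} stacks for {model} GB of weights")
```

Under these assumptions, 140 GB of weights needs six 8-Hi stacks but only four 12-Hi stacks, which is why the higher-capacity stacks make it easier to keep a model of this size on one processor.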

In terms of performance, Micron’s 12-Hi HBM3E stacks deliver over 1.2 TB/s of memory bandwidth with per-pin data transfer rates exceeding 9.2 Gb/s. According to the company, Micron’s 12-Hi HBM3E consumes less power than competitors’ 8-Hi HBM3E stacks despite offering 50% more memory capacity.
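The relationship between the per-pin data rate and the bandwidth of a stack can be checked with simple arithmetic. The sketch below assumes a 1024-bit interface per stack, which is the width defined for HBM3-class memory but is not stated in Micron’s announcement. The function names are illustrative.

```python
# Assumption: 1024 data pins per stack (HBM3-class interface width).
INTERFACE_WIDTH_BITS = 1024


def stack_bandwidth_gbytes(pin_rate_gbps: float) -> float:
    """Peak bandwidth of one stack in GB/s for a given per-pin rate in Gb/s."""
    return pin_rate_gbps * INTERFACE_WIDTH_BITS / 8  # 8 bits per byte


def pin_rate_for(bandwidth_gbytes: float) -> float:
    """Per-pin rate in Gb/s needed to reach a target bandwidth in GB/s."""
    return bandwidth_gbytes * 8 / INTERFACE_WIDTH_BITS


if __name__ == "__main__":
    print(f"9.2 Gb/s per pin -> {stack_bandwidth_gbytes(9.2):.1f} GB/s per stack")
    print(f"1200 GB/s per stack -> {pin_rate_for(1200):.3f} Gb/s per pin")
```

With this interface width, exactly 9.2 Gb/s per pin works out to about 1,178 GB/s, while 1.2 TB/s corresponds to roughly 9.4 Gb/s per pin. That is consistent with Micron quoting both figures as lower bounds ("over" and "exceeding").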

Micron’s 12-Hi HBM3E includes a fully programmable memory built-in self-test (MBIST) system, which the company says speeds time to market and improves reliability for its customers. This technology can simulate system-level traffic at full speed, allowing for thorough testing and quicker validation of new systems.

Micron’s HBM3E memory devices are compatible with TSMC’s chip-on-wafer-on-substrate (CoWoS) packaging technology, widely used for packaging AI processors such as Nvidia’s H100 and H200.

“TSMC and Micron have enjoyed a long-term strategic partnership,” said Dan Kochpatcharin, head of the Ecosystem and Alliance Management Division at TSMC. “As part of the OIP ecosystem, we have worked closely to enable Micron’s HBM3E-based system and chip-on-wafer-on-substrate (CoWoS) packaging design to support our customer’s AI innovation.”

Micron is shipping production-ready 12-Hi HBM3E units to key industry partners for qualification testing throughout the AI ecosystem.

Micron is already developing its next-generation memory solutions, including HBM4 and HBM4E. These upcoming types of memory will continue to push the boundaries of memory performance, ensuring Micron remains a leader in meeting the growing demands for advanced memory used by AI processors, including Nvidia’s GPUs based on the Blackwell and Rubin architectures.