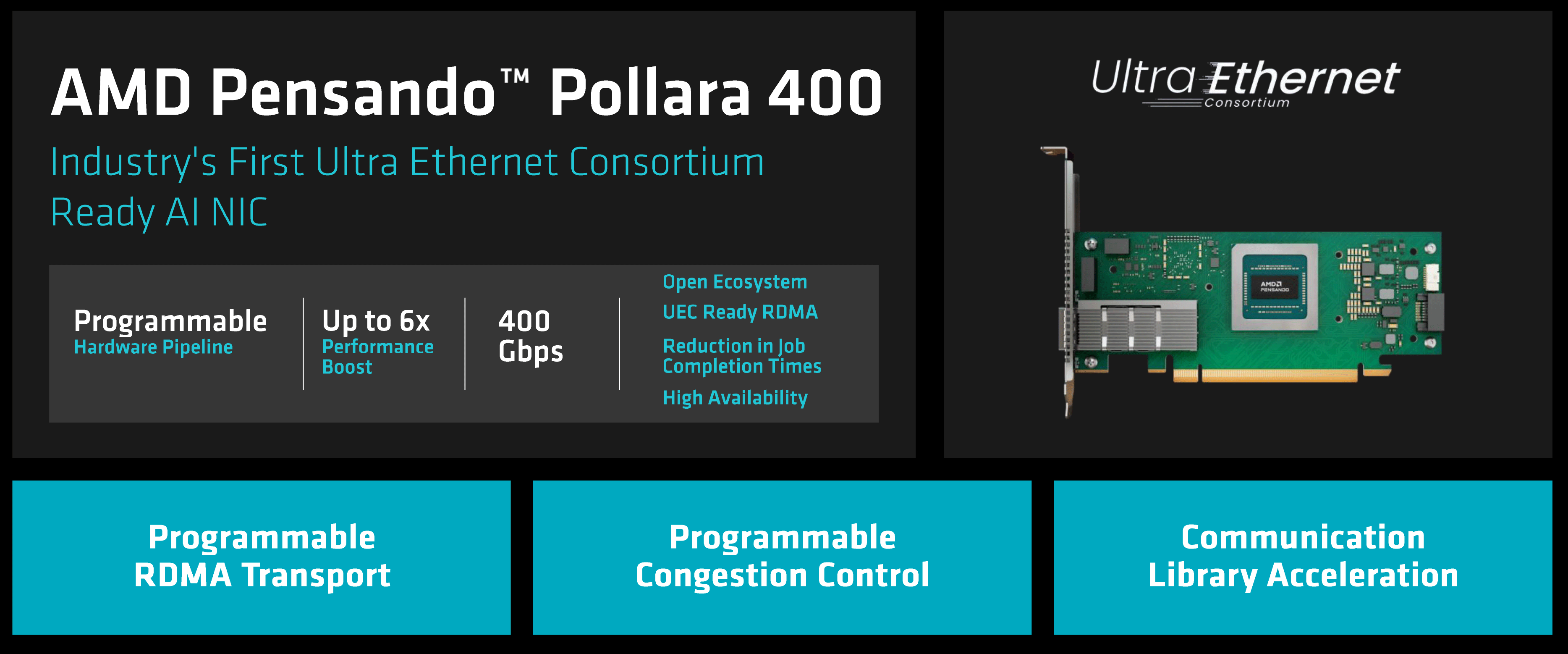

The Ultra Ethernet Consortium (UEC) has delayed release of the version 1.0 of specification from Q3 2024 to Q1 2025, but it looks like AMD is ready to announce an actual network interface card for AI datacenters that is ready to be deployed into Ultra Ethernet datacenters. The new unit is the AMD Pensando Pollara 400, which promises an up to six times performance boost for AI workloads.

The AMD Pensando Pollara 400 is a 400 GbE Ultra Ethernet card based on a processor designed by the company’s Pensando unit. The network processor features a processor with a programmable hardware pipeline, programmable RDMA transport, programmable congestion control, and communication library acceleration. The NIC will sample in the fourth quarter and will be commercially available in the first half of 2025, just after the Ultra Ethernet Consortium formally publishes the UEC 1.0 specification.

The AMD Pensando Pollara 400 AI NIC is designed to optimize AI and HPC networking through several advanced capabilities. One of its key features is intelligent multipathing, which dynamically distributes data packets across optimal routes, preventing network congestion and improving overall efficiency. The NIC also includes path-aware congestion control, which reroutes data away from temporarily congested paths to ensure continuous high-speed data flow.

Additionally, the Pollara 400 offers fast failover, quickly detecting and bypassing network failures to maintain uninterrupted GPU-to-GPU communication delivering robust performance while maximizing utilization of AI clusters and minimizing latency. These features promise to enhance the scalability and reliability of AI infrastructure, making it suitable for large-scale deployments.

The Ultra Ethernet Consortium now includes 97 members, up from 55 in March, 2024. The UEC 1.0 specification is designed to scale the ubiquitous Ethernet technology in terms of performance and features for AI and HPC workloads. The new spec will reuse as much as possible from the original technology to maintain cost efficiency and interoperability. The specification will feature different profiles for AI and HPC; while these workloads have much in common, they are considerably different, so to maximize efficiency there will be separate protocols.