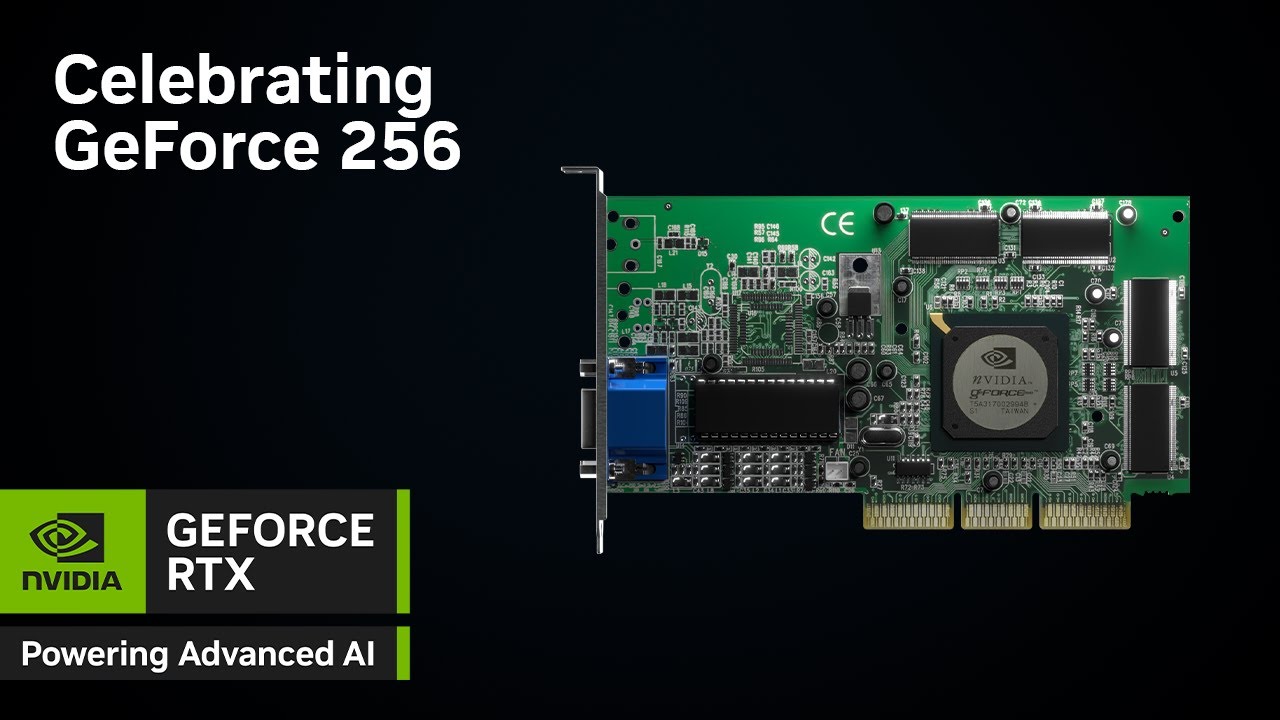

25 years ago today, Nvidia released its first-ever GeForce GPU, the Nvidia GeForce 256. Despite the existence of other video cards at the time, this was the first desktop card to be advertised as a “GPU,” or graphics processing unit. While half a dozen or more companies were making video card hardware at the time, many have since been acquired by AMD or Nvidia. Nvidia is celebrating the anniversary with several tie-ins, including a promotional YouTube video, a blog post, and even Nvidia-green popcorn.

What makes this particular chip so historic besides being the first GeForce graphics card? It’s not that it was the first graphics card, but rather that Nvidia made a distinction and called its first GeForce chip a GPU. This was in reference to the unified single-chip graphics processor, and a marketing choice as much as anything to call attention to the fact that it was like a CPU (central processing unit), only focused on graphics.

The GeForce 256 was one of the first video cards to support the then-nascent hardware transform and lighting (T&L) technology, a new addition to Microsoft’s DirectX API. Hardware T&L reduced the amount of work the CPU had to do for games that used the feature, providing both a visual upgrade and better performance. Modern GPUs have all built on that legacy, going from a fixed-function T&L engine to programmable vertex and pixel shaders, to unified shaders, and now on to features like mesh shaders, ray tracing, and AI calculations. All modern graphics cards owe a lot to the original GeForce design, though that’s not really why consumers fondly remember the GeForce 256.

Nvidia now presents the GeForce 256 as the first step on the long road toward AI PCs. It claims that the release “[set] the stage for advancements in both gaming and computing,” though headers like “From Gaming to AI: The GPU’s Next Frontier” are sure to raise some eyebrows. The ever-rising prices and decreasing availability of Nvidia GPUs, particularly at the high end, reflect a major change over time in Nvidia’s target audience. Not all fans appreciate that change, particularly given that the original GeForce 256 launched at just $199 in 1999; it would still only cost around $373 today, after accounting for inflation.

But Nvidia has also pushed the frontiers of graphics over the past 25 years. The original GeForce 256 used a 139 mm^2 chip packing 17 million transistors, fabricated on TSMC’s 220nm process node. Contemporary CPUs of 1999 like the Pentium III 450 used Intel’s 250nm node and packed 9.5 million transistors into a 128 mm^2 chip, with a launch price of $496. Yikes! For those bad at math, that means the GeForce 256 was less than 10% larger, with nearly 80% more transistors, for less than half the price of a modest CPU.
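For anyone who wants to check that math, here is a minimal Python sketch using only the die sizes, transistor counts, and launch prices quoted above. The dictionary layout and the `percent_more` helper are illustrative names, not anything from Nvidia or Intel.

```python
# Figures as quoted in the article for the two 1999 chips.
geforce_256 = {"die_mm2": 139, "transistors_m": 17.0, "price_usd": 199}
pentium_iii_450 = {"die_mm2": 128, "transistors_m": 9.5, "price_usd": 496}


def percent_more(a, b):
    """Return how much larger a is than b, as a percentage."""
    return (a / b - 1) * 100


die_delta = percent_more(geforce_256["die_mm2"], pentium_iii_450["die_mm2"])
transistor_delta = percent_more(
    geforce_256["transistors_m"], pentium_iii_450["transistors_m"]
)
price_ratio = geforce_256["price_usd"] / pentium_iii_450["price_usd"]

print(f"Die area: {die_delta:.1f}% larger")               # 8.6% larger
print(f"Transistors: {transistor_delta:.1f}% more")       # 78.9% more
print(f"Price: {price_ratio:.2f}x the Pentium III 450")   # 0.40x
```

So the "80% more transistors" figure is a slight round-up of roughly 79%, and the price works out to about 40% of the CPU's.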

Today? A high-end CPU can still be found for far less than $500 — Intel’s Core i7-14700K costs $354, for example. It has a die size of 257 mm^2 and around 12 billion transistors, made using the Intel 7 process. Nvidia’s RTX 4090 meanwhile packs an AD102 chip with 76 billion transistors in a 608 mm^2 die, manufactured on TSMC’s 4N node. And it currently starts at around $1,800. My, how things have changed in 25 years!
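To put the 25-year gap in numbers, the rough Python sketch below compares transistor density and price using only the figures quoted in this article. The variable names and the `density` helper are illustrative, and the Core i7-14700K's "around 12 billion" transistor count is treated as exactly 12 billion for the sake of the arithmetic.

```python
# Transistor counts in millions, die sizes in mm^2, as quoted in the article.
chips = {
    "GeForce 256": {"transistors_m": 17, "die_mm2": 139},
    "Pentium III 450": {"transistors_m": 9.5, "die_mm2": 128},
    "Core i7-14700K": {"transistors_m": 12_000, "die_mm2": 257},  # "around" 12 billion
    "RTX 4090 (AD102)": {"transistors_m": 76_000, "die_mm2": 608},
}


def density(chip):
    """Millions of transistors per square millimeter."""
    return chip["transistors_m"] / chip["die_mm2"]


for name, chip in chips.items():
    print(f"{name}: {density(chip):.2f} M transistors/mm^2")

gpu_density_gain = density(chips["RTX 4090 (AD102)"]) / density(chips["GeForce 256"])
cpu_density_gain = density(chips["Core i7-14700K"]) / density(chips["Pentium III 450"])

# Price comparison: $1,800 today versus $199 at launch, or $373 inflation-adjusted.
nominal_price_gain = 1800 / 199
real_price_gain = 1800 / 373

print(f"GPU density gain: about {gpu_density_gain:.0f}x")
print(f"CPU density gain: about {cpu_density_gain:.0f}x")
print(f"GPU price gain: {nominal_price_gain:.1f}x nominal, {real_price_gain:.1f}x after inflation")
```

By these figures, GPU transistor density grew by roughly a thousandfold while the flagship price rose a little under fivefold in inflation-adjusted terms.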

GeForce 256 marked the beginning of the rise of the GPU, and graphics cards in general, as the most important part of a gaming PC. It had full DirectX 7 and OpenGL support, and graphics demos like Dagoth Moor showed the potential of the hardware. With DirectX 8 adding programmable shaders, the GPU truly began to look more like a different take on compute, and today GPUs offer up to petaflops of computational performance that’s powering the AI generation.