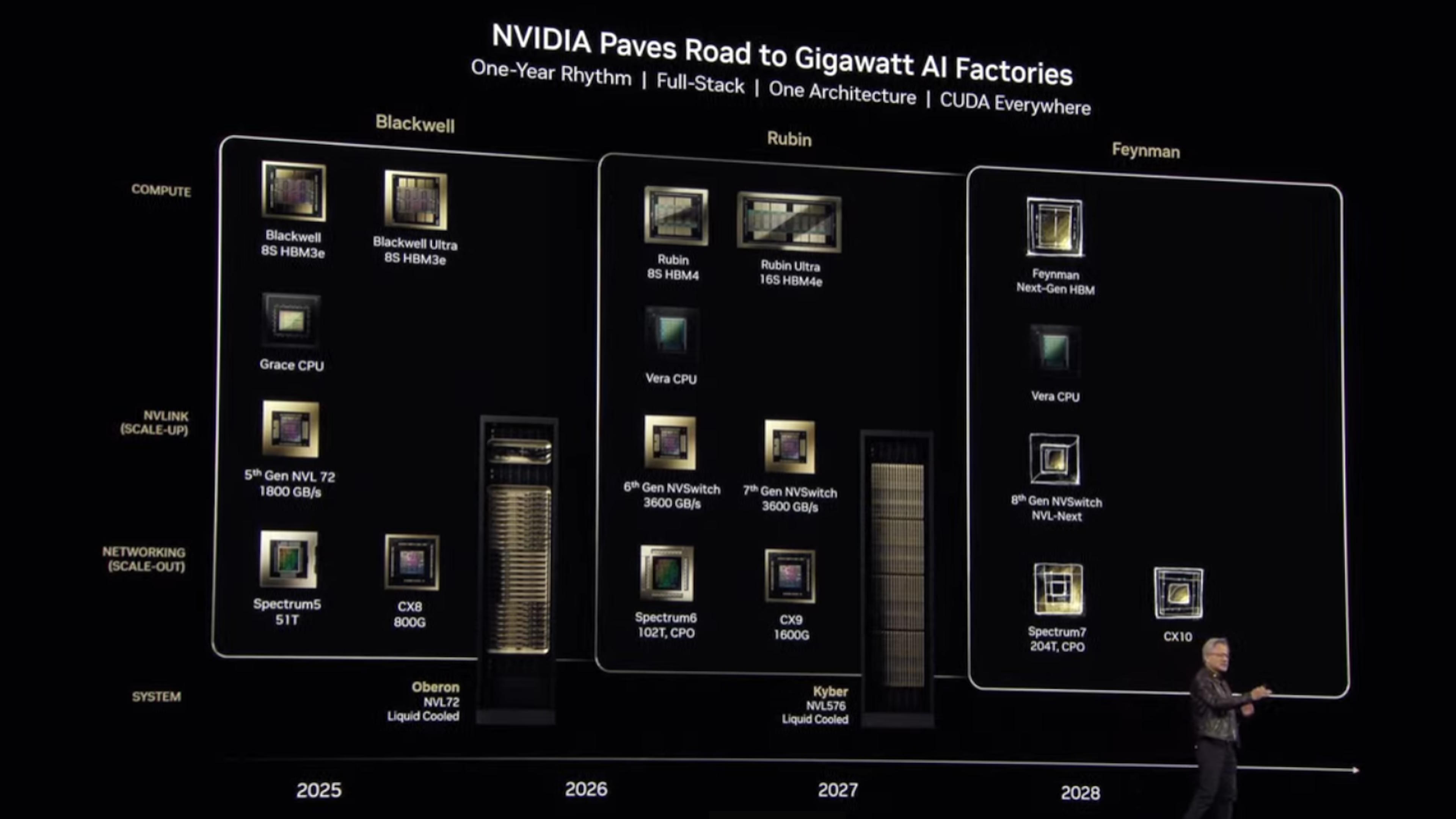

Nvidia announced updates to its data center roadmap for 2026 and 2027 at the company’s GTC 2025 conference today, showcasing the planned configurations for the upcoming Rubin (named after astronomer Vera Rubin) and Rubin Ultra.

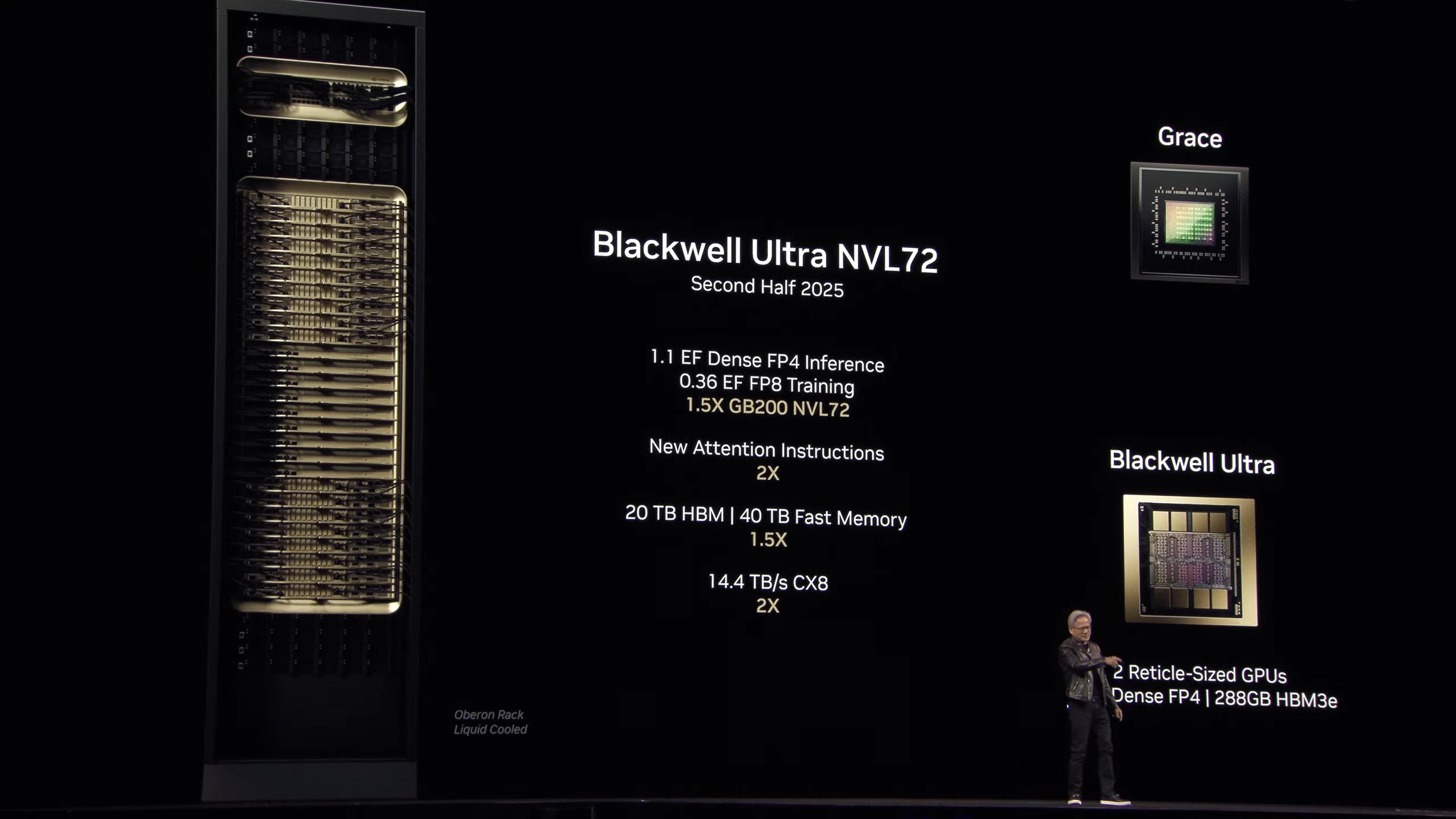

Even though the company has just finished bringing Blackwell B200 into full production, and has Blackwell B300 slated for the second half of 2025, Nvidia is already looking forward to the next two years and helping its partners plan for the upcoming transitions.

One of the more interesting points Nvidia made is that “Blackwell was named wrong.” In short, each Blackwell B200 GPU package actually contains two dies, which CEO Jensen Huang says throws off the NVLink nomenclature.

So even though the company calls the current solution Blackwell B200 NVL72, Huang says it would have been more appropriate to call it NVL144, and that is the convention Nvidia will use for the upcoming Rubin solutions.
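To make the naming change concrete, here is a minimal Python sketch of the convention as Huang described it: the NVL number counts GPU dies rather than GPU packages. The `nvl_name` helper is our own hypothetical illustration, not anything Nvidia publishes, and the Rubin Ultra package count of 144 is inferred from the 576-GPU, four-dies-per-package figures discussed below.

```python
def nvl_name(packages: int, dies_per_package: int) -> str:
    """Hypothetical helper: build an NVL rack name that counts GPU dies."""
    return f"NVL{packages * dies_per_package}"


# Blackwell B200 rack: 72 two-die packages. Sold as "NVL72", but counting
# dies gives the name Huang says it should have had.
print(nvl_name(72, 2))   # NVL144

# Rubin Ultra: 144 packages (inferred, not quoted) with four dies each.
print(nvl_name(144, 4))  # NVL576
```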

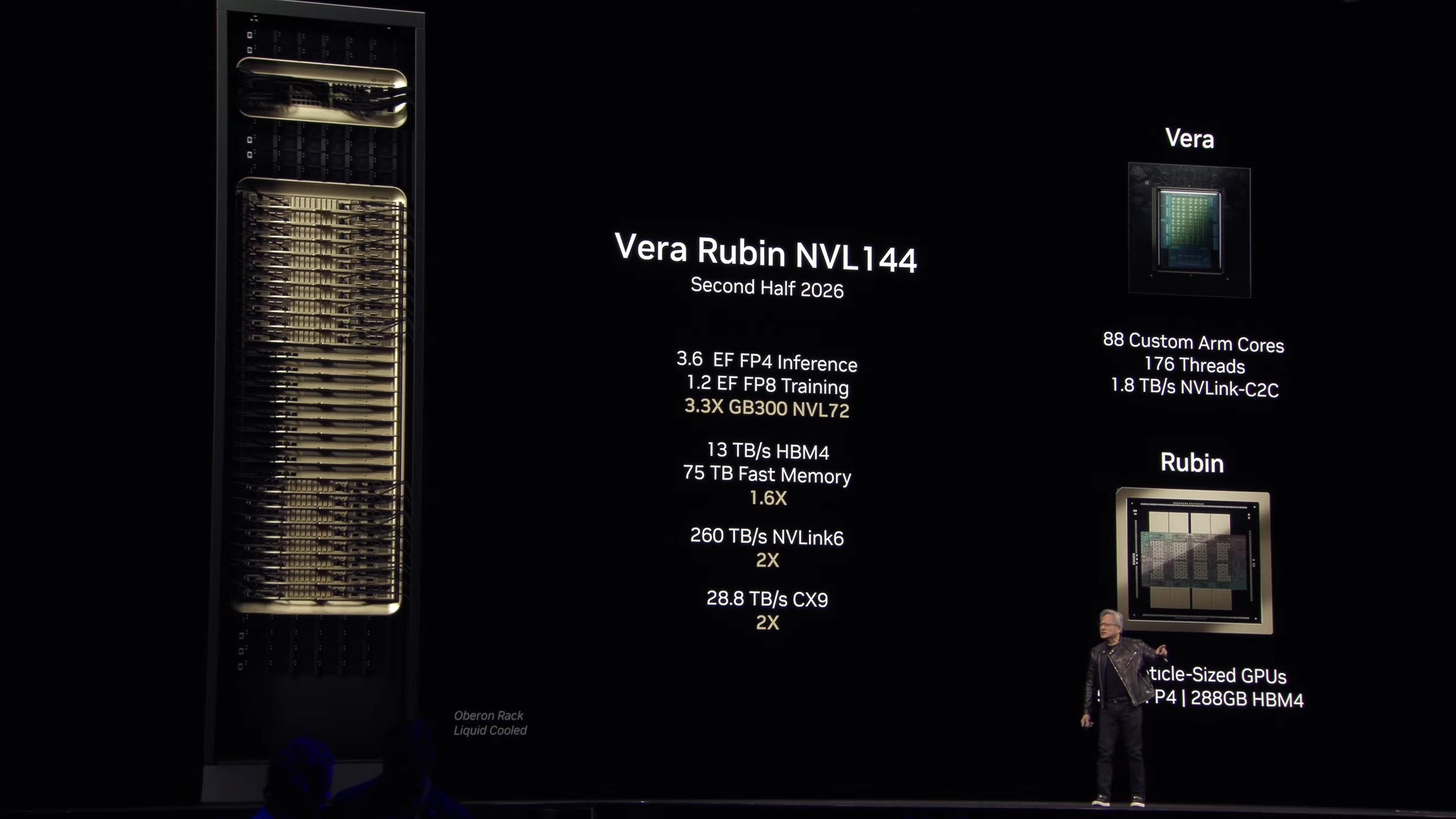

The slide above shows the Rubin NVL144 rack, which will be drop-in compatible with existing Blackwell NVL72 infrastructure, and the second slide shows the same configuration data for Blackwell Ultra B300 NVL72 for comparison. Where B300 NVL72 offers 1.1 ExaFLOPS of dense FP4 compute, Rubin NVL144 (with the same 144 total GPU dies) will offer 3.6 ExaFLOPS of dense FP4.

Rubin NVL144 will also deliver 1.2 ExaFLOPS of FP8 training compute, compared to 0.36 ExaFLOPS for B300 NVL72. Overall, that's roughly a 3.3X improvement in compute performance.
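The 3.3X figure is easy to check from the rack-level numbers. The sketch below divides Rubin NVL144's quoted throughput by B300 NVL72's for each precision. The dictionary keys and the `speedups` helper are illustrative names of our own, and since only the ratios matter, the result holds as long as both racks are quoted in the same unit.

```python
def speedups(old: dict[str, float], new: dict[str, float]) -> dict[str, float]:
    """Ratio of new to old throughput for every metric in `old`."""
    return {metric: new[metric] / old[metric] for metric in old}


# Rack-level throughput as quoted in the article (same unit for both racks).
b300_nvl72 = {"fp4_dense_inference": 1.1, "fp8_training": 0.36}
rubin_nvl144 = {"fp4_dense_inference": 3.6, "fp8_training": 1.2}

for metric, ratio in speedups(b300_nvl72, rubin_nvl144).items():
    print(f"{metric}: {ratio:.2f}x")
# fp4_dense_inference: 3.27x
# fp8_training: 3.33x
```

Both ratios round to the roughly 3.3X Nvidia quotes.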

Rubin will also mark the shift from HBM3/HBM3e to HBM4, with HBM4e reserved for Rubin Ultra. Memory capacity will remain at 288GB per GPU, the same as B300, but bandwidth will rise from 8 TB/s to 13 TB/s. A faster NVLink will also double throughput to 260 TB/s total, and a new CX9 link between racks will provide 28.8 TB/s, double the bandwidth of B300's CX8.
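Put side by side, the memory and interconnect figures grow at different rates: per-GPU HBM capacity is flat while bandwidth rises about 1.6X. In the sketch below, the B300 NVLink and CX8 values are not stated in the article; they are back-computed by halving Rubin's "double" figures, so treat them as assumptions.

```python
# Capacity in GB, bandwidth in TB/s, as quoted for each platform.
b300 = {
    "hbm_capacity_gb": 288,
    "hbm_bandwidth_tbps": 8.0,
    "nvlink_rack_tbps": 260.0 / 2,  # assumption: half of Rubin's 260 TB/s
    "cx_link_tbps": 28.8 / 2,       # assumption: CX8 at half of CX9's 28.8 TB/s
}
rubin = {
    "hbm_capacity_gb": 288,
    "hbm_bandwidth_tbps": 13.0,
    "nvlink_rack_tbps": 260.0,
    "cx_link_tbps": 28.8,
}

# Generation-over-generation multiplier for each figure.
ratios = {key: rubin[key] / b300[key] for key in b300}
for key, ratio in ratios.items():
    print(f"{key}: {ratio:.3f}x")
```

The capacity ratio comes out at 1.000x and the bandwidth ratio at 1.625x, while both link figures show 2.000x by construction.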

The other half of the Rubin family will be the Vera CPU, which replaces the current Grace CPU. Vera will be a relatively compact CPU with 88 custom ARM cores and 176 threads. It will also have a 1.8 TB/s NVLink-C2C (chip-to-chip) interface to link with the Rubin GPUs.

Rubin Ultra will land in the second half of 2027, and while the Vera CPU will remain, the GPU side of things will get another massive boost. The rack moves to a new layout, NVL576. Yes, that's up to 576 GPUs in a rack, each with an as-yet unspecified power consumption.

FP4 inference compute will rocket up to 15 ExaFLOPS, with 5 ExaFLOPS of FP8 training compute. That's about 4X the compute of Rubin NVL144, which makes sense considering it also has four times as many GPUs. This time, each GPU package will contain four GPU dies to boost compute density.
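The "4X" claim can be checked the same way, and dividing by die count shows what it implies per die. This sketch takes Rubin NVL144's FP4 figure as 3.6 ExaFLOPS and treats both NVL numbers as die counts, per the naming convention above; `pflops_per_die` is a hypothetical helper of ours, and spreading rack throughput evenly across dies is a simplifying assumption.

```python
EXA_TO_PETA = 1000  # 1 ExaFLOPS = 1,000 PetaFLOPS


def pflops_per_die(rack_exaflops: float, dies: int) -> float:
    """Spread a rack-level throughput figure evenly across its GPU dies."""
    return rack_exaflops * EXA_TO_PETA / dies


rubin_fp4 = pflops_per_die(3.6, 144)   # Rubin NVL144
ultra_fp4 = pflops_per_die(15.0, 576)  # Rubin Ultra NVL576

print(f"Rack FP4 ratio: {15.0 / 3.6:.2f}x, die-count ratio: {576 / 144:.1f}x")
print(f"FP4 per die: {rubin_fp4:.2f} vs {ultra_fp4:.2f} PFLOPS")
# Rack FP4 ratio: 4.17x, die-count ratio: 4.0x
# FP4 per die: 25.00 vs 26.04 PFLOPS
```

Taken at face value, the quoted figures put per-die FP4 throughput only about 4% higher, with nearly all of the rack-level gain coming from fitting four times as many dies into the rack.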

Where Rubin NVL144 has 75TB of total “fast memory” (for both CPUs and GPUs) per rack, Rubin Ultra NVL576 will offer 365TB. The GPUs will get HBM4e, but here things get a bit curious: Nvidia lists 4.6 PB/s of HBM4e bandwidth, which across 576 GPUs works out to about 8 TB/s per GPU. That's seemingly less bandwidth per GPU than Rubin's 13 TB/s.

Perhaps it comes down to how the four GPU dies are linked together? Each package of four reticle-sized GPU dies will also have 1TB of HBM4e and 100 PetaFLOPS of FP4 compute.
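One way to frame the puzzle is to split the rack-level figures two ways, per die and per package. The sketch assumes 576 dies arranged as 144 packages of four (inferred from the figures above) and simply divides. Whether Rubin's 13 TB/s should be compared with the per-die or the per-package result depends on what Nvidia counts as a "GPU" on each slide, which we can't confirm.

```python
# Rubin Ultra NVL576 figures as quoted; the package count is derived.
HBM4E_RACK_TBPS = 4600.0              # 4.6 PB/s expressed in TB/s
DIES = 576
DIES_PER_PACKAGE = 4
PACKAGES = DIES // DIES_PER_PACKAGE   # 144 (inferred)

FP4_PFLOPS_PER_PACKAGE = 100          # quoted per four-die package
HBM4E_TB_PER_PACKAGE = 1              # quoted per four-die package

per_die_bw = HBM4E_RACK_TBPS / DIES          # about 8 TB/s
per_package_bw = HBM4E_RACK_TBPS / PACKAGES  # about 32 TB/s
rack_fp4_exaflops = PACKAGES * FP4_PFLOPS_PER_PACKAGE / 1000
rack_hbm_tb = PACKAGES * HBM4E_TB_PER_PACKAGE

print(f"HBM4e per die: {per_die_bw:.2f} TB/s, per package: {per_package_bw:.2f} TB/s")
print(f"FP4 from packages: {rack_fp4_exaflops:.1f} ExaFLOPS; HBM4e total: {rack_hbm_tb} TB")
# HBM4e per die: 7.99 TB/s, per package: 31.94 TB/s
# FP4 from packages: 14.4 ExaFLOPS; HBM4e total: 144 TB
```

The per-package compute figure adds up to 14.4 ExaFLOPS, a little under the 15 ExaFLOPS rack figure, so at least one of the two is presumably rounded.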

The NVLink7 interface will be roughly 6X faster than Rubin's NVLink, with 1.5 PB/s of throughput. The CX9 interlinks will also see a 4X improvement to 115.2 TB/s between racks, possibly by quadrupling the number of links.
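The interconnect multipliers can be checked against the Rubin NVL144 figures quoted earlier. The sketch below just divides the two generations' throughput, and shows that 1.5 PB/s over 260 TB/s is closer to 5.8X than a round 6X. The variable names are our own.

```python
# Rack-level interconnect throughput in TB/s, as quoted for each platform.
nvlink = {"rubin_nvl144": 260.0, "rubin_ultra_nvl576": 1500.0}  # 1.5 PB/s
cx9 = {"rubin_nvl144": 28.8, "rubin_ultra_nvl576": 115.2}

# Generation-over-generation multiplier for each link type.
nvlink_gain = nvlink["rubin_ultra_nvl576"] / nvlink["rubin_nvl144"]
cx9_gain = cx9["rubin_ultra_nvl576"] / cx9["rubin_nvl144"]

print(f"NVLink: {nvlink_gain:.2f}x")  # NVLink: 5.77x
print(f"CX9:    {cx9_gain:.2f}x")     # CX9:    4.00x
```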

Obviously, there's plenty we don't yet know about Rubin and Rubin Ultra, but those details will get fleshed out in the future. Data centers need far more planning than consumer GPUs, so Nvidia has shared its plans well in advance of the products being ready to ship. And it's not quite done…

After Rubin, Nvidia’s next data center architecture will be named after theoretical physicist Richard Feynman. Presumably that means we’ll get Richard CPUs with Feynman GPUs, if Nvidia keeps with the current pattern.